No, China has not teamed up with Democrats to steal the midterm elections, as some people on YouTube are claiming. Neither has Saudi Arabia.

And there’s no evidence that an “overwhelming amount of fraud” tipped Pennsylvania in 2020, or that electronic voting machines will manipulate results next week, as one conservative activist claimed in a video.

In the run-up to the midterm elections, disinformation watchdogs say they are concerned that what YouTube has described as an aggressive effort to tackle disinformation on the Google-owned platform has developed blind spots. They are particularly concerned about YouTube Shorts, the platform’s TikTok-like service for very short videos, as well as its Spanish-language videos.

But the situation is difficult to assess, more than a dozen researchers said in interviews with The New York Times, because they have limited access to data and because watching videos is time-consuming work.

“It’s easier to do research with other forms of content,” such as text on Facebook or Twitter, said Jiore Craig, head of digital integrity at the Institute for Strategic Dialogue, or ISD, a nonprofit that fights extremism and disinformation. “That puts YouTube in a situation where they get off more easily.”

While Facebook and Twitter are closely monitored for misinformation, YouTube has often flown under the radar, despite the video platform’s wide influence. It reaches more than two billion people and runs the web’s second most popular search engine.

YouTube banned videos alleging widespread fraud in the 2020 presidential election, but it has not drafted a similar policy for the midterm elections, a move that has drawn criticism from some watchdogs.

“You don’t build a sprinkler system after the building is on fire,” said Angelo Carusone, the president of Media Matters for America, a nonprofit that monitors conservative misinformation.

A YouTube spokeswoman said the company disagreed with some of the criticism of its work fighting misinformation. “We have invested heavily in our policies and systems to ensure that we successfully fight election-related disinformation with a multi-layered approach,” spokeswoman Ivy Choi said in a statement.


YouTube said it had removed a number of videos flagged by The New York Times for violating its policies on spam and election integrity, and had determined that other content did not violate its policies. The company also said it removed 122,000 videos containing misinformation from April to June.

“Our community guidelines prohibit misleading voters about how to vote, encouraging interference in the democratic process and falsely claiming that the 2020 US election was rigged or stolen,” Ms. Choi said. “This policy applies worldwide regardless of language.”

YouTube stepped up its stance against political disinformation after the 2020 presidential election. Some YouTube creators took to the platform and livestreamed the January 6, 2021, attack on the Capitol. Within 24 hours, the company began punishing people who spread the lie that the 2020 election was stolen and revoked President Donald J. Trump’s ability to upload videos.

YouTube has pledged $15 million to hire more than 100 additional content moderators to help with the midterm elections and Brazil’s presidential election, and the company has more than 10,000 moderators stationed around the world, according to a person familiar with the matter who was not authorized to discuss personnel decisions.

The company has refined its recommendation algorithm so that the platform does not recommend political videos from unverified sources to viewers, according to another person familiar with the matter. YouTube has also set up an election war room staffed by dozens of officials and is preparing to quickly remove videos and livestreams that violate its policies on Election Day, the person said.

Still, researchers argued that YouTube could have been even more proactive in countering false stories that could continue to reverberate after the election.

The most prominent election-related conspiracy theory on YouTube is the baseless claim that some Americans cheated by stuffing drop boxes with multiple ballots. The idea came from a discredited, conspiracy-laden documentary titled “2000 Mules,” which claimed that Mr. Trump lost re-election because of illegal drop-box voting.

ISD searched YouTube Shorts and found at least a dozen short videos echoing the ballot-smuggling allegations of “2000 Mules,” with no warning labels to counter the misinformation or provide authoritative election information, according to links shared with The New York Times. View counts varied widely, from a few dozen to tens of thousands. Two of the videos contained links to the film itself.

ISD found the videos through keyword searches. The list was not intended to be exhaustive, but “these Shorts were identified relatively easily, demonstrating that they remain easily accessible,” three ISD researchers wrote in a report. In some videos, men address the camera from a car or at home, professing their strong belief in the film. Other videos promote the documentary without personal comment.

The nonprofit group also looked at two rivals of YouTube Shorts, TikTok and Instagram Reels, and found that both had also been spreading similar types of misinformation.

Ms. Craig, of ISD, said nonprofits like hers were working hard in the run-up to Election Day to catch and counter disinformation left up on tech giants’ social media platforms, even though those companies have billions of dollars and thousands of content moderators.

“Our teams are stretched thin trying to catch up with the well-resourced entities that could be doing this type of work,” she said.

Although videos on YouTube Shorts are no longer than a minute, they are more difficult to review than longer videos, according to two people familiar with the matter.

The company relies on artificial intelligence to scan what people upload to its platform. Some AI systems work within minutes and others over hours, looking for signs that something is wrong with the content, one of the people said. Shorter videos give off fewer signals than longer ones, the person said, so YouTube has started working on a system that can handle the short format more effectively.

According to research and analysis by Media Matters and Equis, a nonprofit focused on the Latino community, YouTube is also struggling to curb disinformation in Spanish.

Nearly half of Latinos turn to YouTube for news every week, more than to any other social media platform, said Jacobo Licona, a researcher at Equis. And those viewers have access to a plethora of misinformation and one-sided political propaganda on the platform, he said, with Latin American influencers in countries like Mexico, Colombia and Venezuela wading into US politics.

Many of them have co-opted well-known stories, such as false claims about dead people voting in the United States, and translated them into Spanish.

In October, YouTube asked a group that tracks Spanish-language disinformation on the site for access to its monitoring data, according to two people familiar with the request. The company was seeking outside help in overseeing its platform, and the group was concerned that YouTube had not made the necessary investments in Spanish-language content, they said.

YouTube said it had consulted subject-matter experts to gain more insight ahead of the midterms. It also said it had made significant investments in fighting harmful misinformation in several languages, including Spanish.

YouTube has several laborious processes for moderating Spanish-language videos. The company has Spanish-speaking human moderators, who help train the AI systems that also screen content. One AI method involved transcribing videos and reviewing the text, one employee said. Another approach was to use Google Translate to convert the transcript from Spanish to English. These methods have not always proved accurate because of idioms and slang, the person said.

YouTube said its systems also evaluated visual cues, metadata and on-screen text in Spanish-language videos, and that its AI had been able to learn new trends, such as evolving idioms and slang.

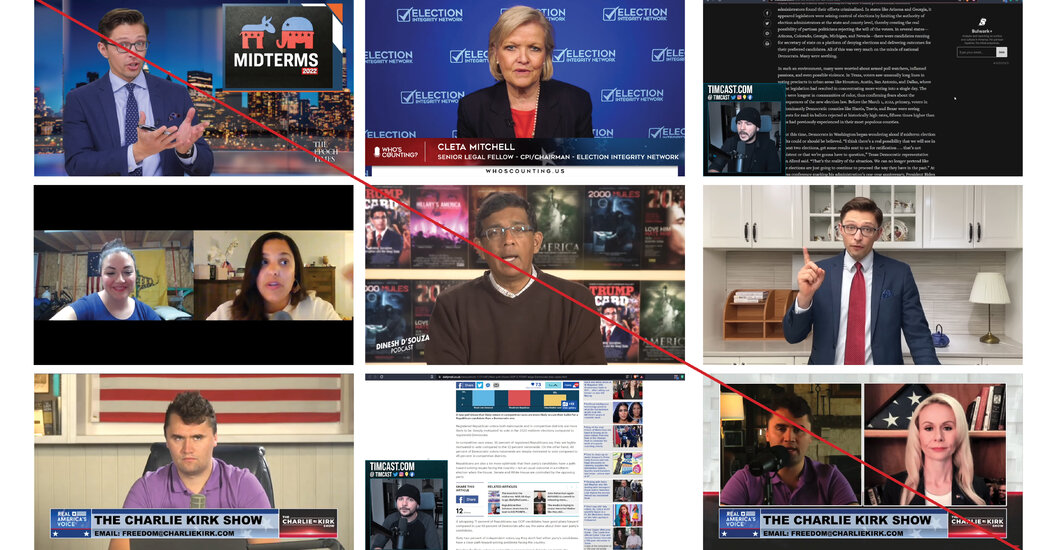

In English-language videos, researchers have found allegations of voter fraud from well-known personalities with large followings, including Charlie Kirk, Dinesh D’Souza (who created “2000 Mules”) and Tim Pool, a YouTube personality with 1.3 million subscribers who is known for sowing doubts about the results of the 2020 election and questioning the use of drop boxes.

“One of the most disturbing things to me is that people who watch drop boxes are being praised and encouraged on YouTube,” Kayla Gogarty, deputy research director at Media Matters, said in an interview. “That’s a very clear example of something going from online to offline, which can cause real-world harm.”