In 1977 Andrew Barto, as a researcher at the University of Massachusetts, began to explore a new theory that neurons behaved like hedonists. The basic idea was that the human brain was powered by billions of nerve cells that each tried to maximize pleasure and minimize pain.

A year later, he was joined by another young researcher, Richard Sutton. Together, they worked to explain human intelligence using this simple concept and applied it to artificial intelligence. The result was “reinforcement learning,” a way for AI systems to learn from the digital equivalent of pleasure and pain.

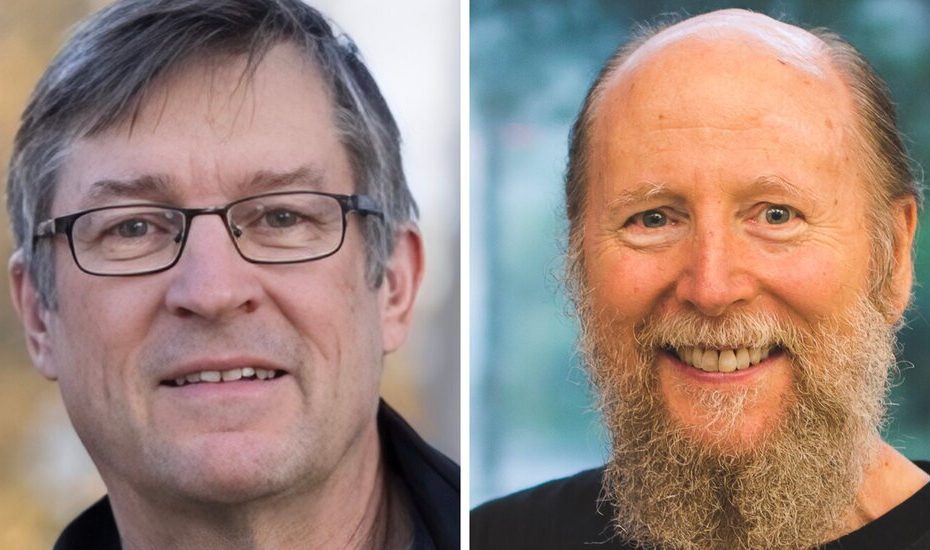

On Wednesday, the Association for Computing Machinery, the world's largest society of computing professionals, announced that Dr. Barto and Dr. Sutton had won this year's Turing Award for their work on reinforcement learning. The Turing Award, which was introduced in 1966, is often called the Nobel Prize of computing. The two scientists will share the $1 million prize that comes with the award.

Over the past decade, reinforcement learning has played a crucial role in the rise of artificial intelligence, including breakthrough technologies such as Google's AlphaGo and OpenAI's ChatGPT. The techniques that powered these systems were rooted in the work of Dr. Barto and Dr. Sutton.

“They are the undisputed pioneers of reinforcement learning,” said Oren Etzioni, a professor emeritus of computer science at the University of Washington and founding chief executive of the Allen Institute for Artificial Intelligence. “They generated the key ideas – and they wrote the book on the subject.”

Their book, “Reinforcement Learning: An Introduction,” which was published in 1998, remains the definitive exploration of an idea that, many experts say, is only beginning to realize its potential.

Psychologists have long studied the ways that people and animals learn from their experiences. In the 1940s, the groundbreaking British computer scientist Alan Turing suggested that machines could learn in almost the same way.

But it was Dr. Barto and Dr. Sutton who began exploring the mathematics of how this might work, building on a theory that A. Harry Klopf, a computer scientist working for the government, had proposed. Dr. Barto went on to build a lab at UMass Amherst dedicated to the idea, while Dr. Sutton founded a similar kind of lab at the University of Alberta in Canada.

“It is kind of an obvious idea when you're talking about humans and animals,” said Dr. Sutton, who is also a research scientist at Keen Technologies, an AI start-up, and a fellow at the Alberta Machine Intelligence Institute, one of Canada's three national AI labs. “As we revived it, it was about machines.”

This remained an academic pursuit until the arrival of AlphaGo in 2016. Most experts believed that another 10 years would pass before anyone built an AI system that could beat the world's best players at the game of Go.

But during a match in Seoul, South Korea, AlphaGo beat Lee Sedol, the best Go player of the past decade. The trick was that the system had played millions of games against itself, learning by trial and error. It learned which moves brought success (pleasure) and which brought failure (pain).

The Google team that built the system was led by David Silver, a researcher who had studied reinforcement learning under Dr. Sutton at the University of Alberta.

Many experts still questioned whether reinforcement learning could work outside of games. Game winnings are determined by points, which makes it easy for machines to distinguish between success and failure.

But reinforcement learning has also played an essential role in online chatbots.

In the run-up to the release of ChatGPT in the fall of 2022, OpenAI hired hundreds of people to use an early version and provide precise suggestions that could hone its skills. They showed the chatbot how to respond to particular questions, rated its responses and corrected its mistakes. By analyzing those suggestions, ChatGPT learned to be a better chatbot.

Researchers call this “reinforcement learning from human feedback,” or RLHF, and it is one of the main reasons that today's chatbots respond in surprisingly lifelike ways.

(The New York Times has sued OpenAI and its partner, Microsoft, claiming copyright infringement of news content related to AI systems. OpenAI and Microsoft have denied those claims.)

More recently, companies such as OpenAI and the Chinese start-up DeepSeek have developed a form of reinforcement learning that allows chatbots to learn from themselves, much as AlphaGo did. By working through various math problems, for example, a chatbot can learn which methods lead to the correct answer and which do not.

If it repeats this process with an enormous set of problems, the bot can learn to mimic the way people reason, at least in some ways. The result is so-called reasoning systems such as OpenAI's o1 or DeepSeek's R1.

Dr. Barto and Dr. Sutton say that these systems hint at the way machines will learn in the future. Eventually, they say, robots imbued with AI will learn from trial and error in the real world, as people and animals do.

“Learning to control a body through reinforcement – that is very natural,” said Dr. Barto.