Ars Technica

On Thursday, a pair of tech hobbyists released Riffusion, an AI model that generates music from text prompts by creating a visual representation of sound and converting it into audio for playback. It uses a fine-tuned version of the Stable Diffusion 1.5 image synthesis model, applying visual latent diffusion to sound processing in a novel way.

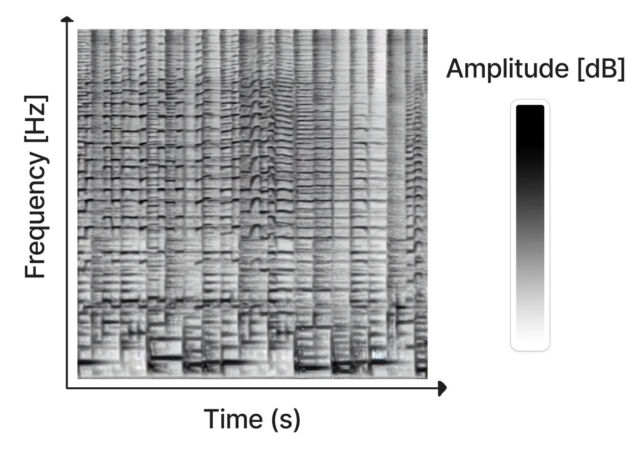

Created as a hobby project by Seth Forsgren and Hayk Martiros, Riffusion works by generating sonograms (also called spectrograms), which store audio in a two-dimensional image. In a sonogram, the X axis represents time (the order in which the frequencies are played, from left to right), and the Y axis represents the frequency of the sounds. Meanwhile, the color of each pixel in the image represents the amplitude of the sound at that frequency and moment in time.
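To make that layout concrete, here is a minimal sketch of how a short sound can be turned into such an image with a short-time Fourier transform. It is an illustration of the general idea, not Riffusion's own code: the sample rate, frame size, hop size, and the `magnitude_spectrogram` helper are all assumptions chosen for the example.

```python
import numpy as np

SAMPLE_RATE = 8_000  # samples per second (illustrative value)
FRAME_SIZE = 256     # samples analyzed per column of the image
HOP = 128            # samples between the starts of adjacent columns


def magnitude_spectrogram(signal, frame_size=FRAME_SIZE, hop=HOP):
    """Return a 2-D array: rows are frequency bins, columns are time frames."""
    window = np.hanning(frame_size)
    n_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_size] * window for i in range(n_frames)]
    )
    # One FFT per frame gives the amplitude of each frequency at that moment.
    # Transpose so time runs along the X axis and frequency along the Y axis.
    return np.abs(np.fft.rfft(frames, axis=1)).T


# One second of a pure 1,000 Hz tone as a stand-in for real audio.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
tone = np.sin(2 * np.pi * 1000 * t)

spec = magnitude_spectrogram(tone)

# Map amplitude to pixel brightness (0-255), as in a grayscale image.
image = np.round(255 * spec / spec.max()).astype(np.uint8)

# The brightest row in the first column corresponds to the tone's frequency.
peak_hz = spec[:, 0].argmax() * SAMPLE_RATE / FRAME_SIZE
print(spec.shape, peak_hz)
```

In this array, row 0 is the lowest frequency. Images of this kind are usually drawn with low frequencies at the bottom, so a viewer would flip the rows (for example with `np.flipud`) before display.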

Since a sonogram is a kind of image, Stable Diffusion can process it. Forsgren and Martiros trained a custom Stable Diffusion model with example sonograms paired with descriptions of the sounds or musical genres they represented. With that knowledge, Riffusion can generate new music on the fly based on text prompts that describe the type of music or sound you want to hear, such as “jazz,” “rock,” or even typing on a keyboard.

After generating the sonogram image, Riffusion uses Torchaudio to turn the sonogram into sound and play it back as audio.

“This is the v1.5 Stable Diffusion model with no modifications, just fine-tuned on images of spectrograms paired with text,” the creators of Riffusion write on the explanation page. “It can generate infinite variations of a prompt by varying the seed. All the same web UIs and techniques like img2img, inpainting, negative prompts and interpolation work out of the box.”

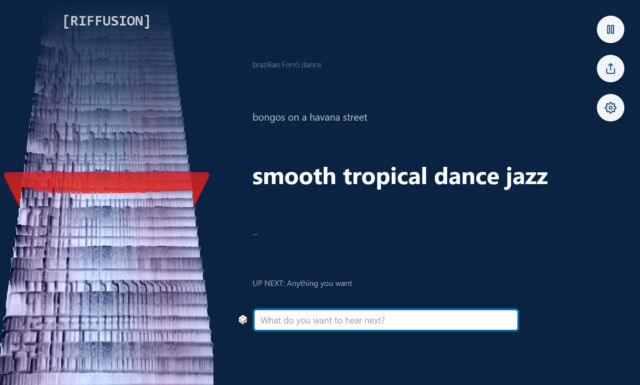

Visitors to the Riffusion website can experiment with the AI model thanks to an interactive web app that generates interpolated sonograms (stitched together smoothly for uninterrupted playback) in real time while continuously visualizing the spectrogram on the left side of the page.

Riffusion can also fuse styles. For example, typing “smooth tropical dance jazz” blends elements of several genres into a new result, which encourages experimentation.

Of course, Riffusion isn’t the first AI-powered music generator. Earlier this year, Harmonai released Dance Diffusion, an AI-powered generative music model. OpenAI’s Jukebox, announced in 2020, also generates new music with a neural network. And websites like Soundraw generate music nonstop on the fly.

Compared to those more streamlined AI music efforts, Riffusion feels more like the hobby project it is. The music it generates ranges from the interesting to the unintelligible, but it remains a remarkable application of latent diffusion technology that manipulates audio in a visual space.

The Riffusion model checkpoint and code are available on GitHub.