Benj Edwards / Ars Technica

More than once this year, AI experts have repeated a familiar refrain: “Please slow down.” AI news in 2022 was lightning fast and unforgiving; the moment you knew where things stood in AI, a new paper or discovery would make that understanding obsolete.

In 2022, we arguably reached the knee of the curve when it comes to generative AI that can produce creative works consisting of text, images, audio, and video. This year, deep-learning AI emerged from a decade of research and began to make its way into commercial applications, allowing millions of people to try the technology for the first time. AI creations sparked wonder, created controversy, provoked existential crises, and garnered attention.

Here’s a look back at the seven biggest AI news stories of the year. It was hard to pick just seven, but if we didn’t cut it off somewhere, we’d still be writing about this year’s events well into 2023 and beyond.

April: DALL-E 2 dreams in focus

OpenAI

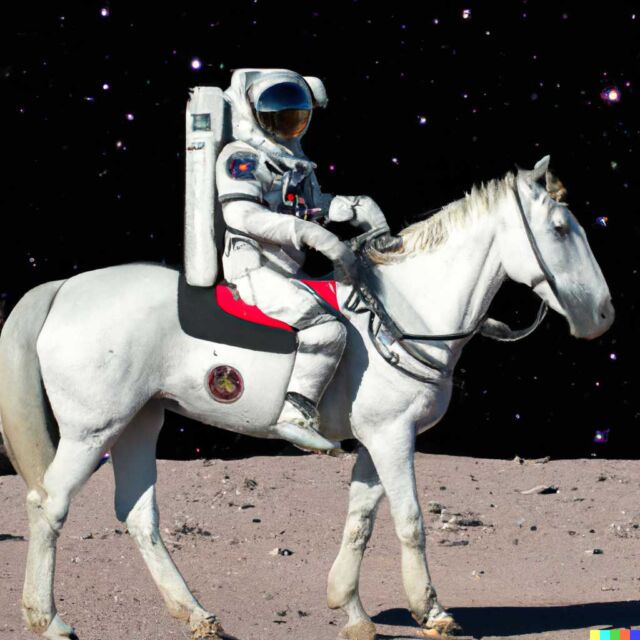

In April, OpenAI announced DALL-E 2, a deep-learning image synthesis model that boggles the mind with its seemingly magical ability to generate images from text prompts. Trained on hundreds of millions of images from the Internet, DALL-E 2 was able to create new combinations of images thanks to a technique called latent diffusion.

Twitter was soon filled with images of astronauts on horseback, teddy bears wandering through ancient Egypt, and other near-photorealistic works. We had last heard about DALL-E a year earlier, when version 1 of the model struggled to render a low-resolution avocado chair. Suddenly, version 2 was illustrating our wildest dreams at 1024×1024 resolution.

Due to concerns about abuse, OpenAI initially only allowed 200 beta testers to use DALL-E 2. Content filters blocked violent and sexual prompts. Gradually, OpenAI admitted more than a million people into a closed trial, and DALL-E 2 finally became available to everyone at the end of September. But by then, another contender had emerged in the world of latent diffusion, as we’ll see below.

July: Google engineer thinks LaMDA is sentient

Getty Images | Washington Post

In early July, the Washington Post broke the news that a Google engineer named Blake Lemoine had been placed on paid leave over his belief that Google’s LaMDA (Language Model for Dialogue Applications) was sentient, and that it deserved rights equal to those of a human.

While working as part of Google’s Responsible AI organization, Lemoine began talking to LaMDA about religion and philosophy and believed he saw true intelligence behind the text. “I know a person when I talk to them,” Lemoine told the Post. “It doesn’t matter if they have a flesh brain in their heads. Or if they have a billion lines of code. I talk to them. And I hear what they have to say, and that’s how I decide what is and isn’t a person.”

Google replied that LaMDA was only telling Lemoine what he wanted to hear and that LaMDA was not, in fact, sentient. Like the GPT-3 text generation tool, LaMDA had previously been trained on millions of books and websites. It responded to Lemoine’s input (a prompt containing the full text of the conversation) by predicting the most likely words to follow, without any deeper understanding.
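
To make “predicting the most likely words to follow” a bit more concrete, here is a toy sketch in Python. It is not how LaMDA or GPT-3 works internally (those are large neural networks trained on vast amounts of text); it is a simple word-pair counter, and the function names and the tiny training text are made up purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count which word follows each word in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_prompt(follows, prompt, max_words=5):
    """Greedily append the most frequent next word, one word at a time.

    Assumes the prompt contains at least one word.
    """
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the toy model has never seen this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical, tiny "training set": "happy" follows "feel" more often than "sad".
corpus = "i feel happy . i feel happy . i feel sad ."
model = train_bigram_model(corpus)

print(continue_prompt(model, "do you feel", max_words=1))
```

The toy model answers “happy” only because that word followed “feel” most often in its training text, not because it feels anything. That is the gist of the argument about LaMDA, at a vastly smaller scale.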

Along the way, Lemoine allegedly violated Google’s confidentiality policy by telling others about his group’s work. Later in July, Google fired Lemoine for violating its data security policy. He wasn’t the last person in 2022 to get swept up in the hype over an AI’s large language model, as we’ll see.

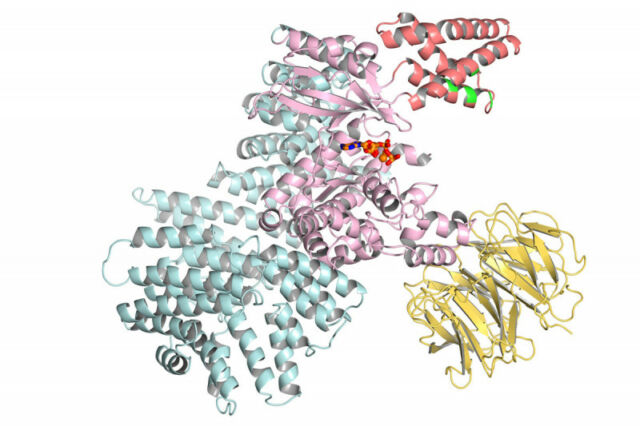

July: DeepMind AlphaFold predicts almost every known protein structure

In July, DeepMind announced that its AlphaFold AI model had predicted the shape of nearly every known protein from nearly every organism on Earth with a sequenced genome. Originally announced in summer 2021, AlphaFold had previously predicted the shape of all human proteins. But a year later, the protein database expanded to more than 200 million protein structures.

DeepMind has made these predicted protein structures available in a public database hosted by the European Bioinformatics Institute at the European Molecular Biology Laboratory (EMBL-EBI), allowing researchers around the world to access and use the data for research related to medicine and biological science.

Proteins are basic building blocks of life, and knowing their shapes can help scientists control or modify them. This is especially useful when developing new medicines. “Almost every drug that has come to market in recent years has been designed in part based on knowledge of protein structures,” said Janet Thornton, senior scientist and director emeritus at EMBL-EBI. That makes it a big deal to know them all.