SAN FRANCISCO — At OpenAI, one of the world’s most ambitious artificial intelligence labs, researchers are building technology that allows you to create digital images simply by describing what you want to see.

They call it DALL-E in a nod to both “WALL-E,” the 2008 animated film about an autonomous robot, and Salvador Dalí, the surrealist painter.

OpenAI, backed by a billion dollars in funding from Microsoft, is not yet sharing the technology with the general public. But one recent afternoon, Alex Nichol, one of the researchers behind the system, demonstrated how it works.

When he asked for “an avocado-shaped teapot,” typing the words into a largely blank computer screen, the system created 10 different images of a dark green avocado teapot, some with pits and some without. “DALL-E is good at avocados,” Mr. Nichol said.

When he typed “cats playing chess,” it placed two fuzzy kittens on opposite sides of a checkered board, with 32 chess pieces in between. When he asked for “a teddy bear playing a trumpet underwater,” one image showed tiny air bubbles rising from the end of the bear’s trumpet to the surface of the water.

DALL-E can also edit photos. When Mr. Nichol erased the teddy bear’s trumpet and asked for a guitar instead, a guitar appeared between the furry arms.

A team of seven researchers spent two years developing the technology, which OpenAI plans to eventually offer as a tool for people like graphic artists, providing new shortcuts and new ideas for creating and editing digital images. Computer programmers are already using Copilot, a tool based on similar technology from OpenAI, to generate snippets of software code.

But for many experts, DALL-E is worrisome. As this kind of technology continues to improve, they say, it could help spread misinformation across the Internet, fueling the kind of online campaigns that may have influenced the 2016 presidential election.

“You could use it for good things, but you could definitely use it for all sorts of other crazy, worrying uses, and that includes deep fakes,” such as misleading photos and videos, said Subbarao Kambhampati, a professor of computer science at Arizona State University.

Half a decade ago, the world’s leading AI labs built systems that could identify objects in digital images and even generate images of their own, including flowers, dogs, cars and faces. A few years later, they built systems that could do much the same with written language, summarizing articles, answering questions, generating tweets, and even writing blog posts.

Now researchers are combining those technologies to create new forms of AI. DALL-E is a remarkable step forward as it juggles both language and images and in some cases understands the relationship between the two.

“We can now use multiple, intersecting streams of information to create ever-improving technology,” said Oren Etzioni, chief executive of the Allen Institute for Artificial Intelligence, an artificial intelligence lab in Seattle.

The technology is not perfect. When Mr. Nichol asked DALL-E to “put the Eiffel Tower on the moon,” it did not quite grasp the idea. It put the moon in the sky above the tower. When he asked for “a living room full of sand,” the scene looked more like a construction site than a living room.

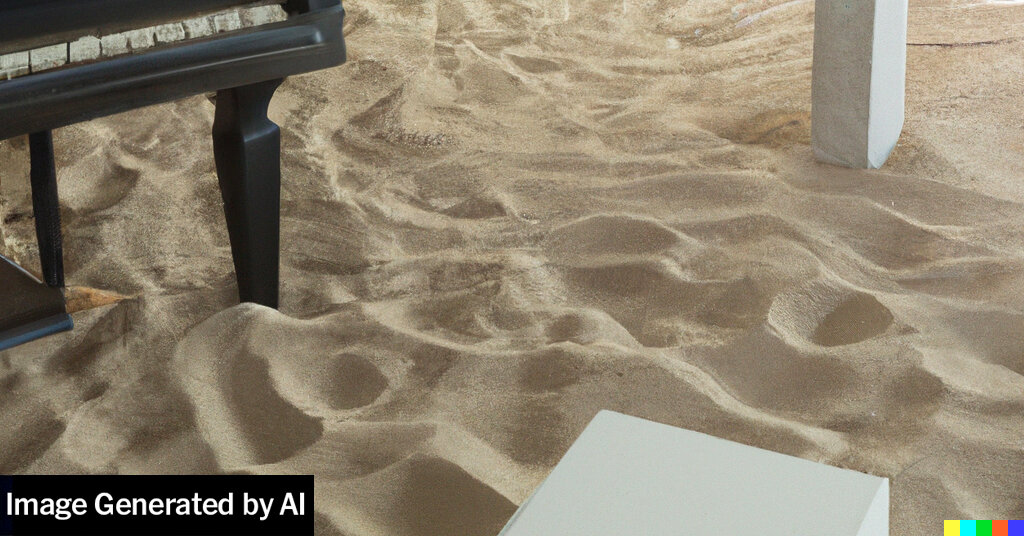

But when Mr. Nichol tweaked his requests a bit, adding or subtracting a few words here or there, it gave him what he wanted. When he asked for “a piano in a living room full of sand,” the image looked more like a beach in a living room.

DALL-E is what artificial intelligence researchers call a neural network, a mathematical system loosely modeled on the network of neurons in the brain. That is the same technology that recognizes the commands spoken into smartphones and identifies pedestrians as self-driving cars navigate city streets.

A neural network learns skills by analyzing large amounts of data. By pinpointing patterns in thousands of avocado photos, for example, it can learn to recognize an avocado. DALL-E looks for patterns as it analyzes millions of digital images, as well as text captions that describe what each image depicts. In this way it learns to recognize the connections between the images and the words.
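The idea of connecting images and words can be illustrated with a toy example. The sketch below is not how DALL-E works internally; it assumes each image and caption has already been reduced to a short list of numbers, and it picks the caption whose numbers point in the most similar direction. The vectors, names and the `best_caption` helper are all invented for illustration.

```python
import math

def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-made vectors. A real system would learn these
# from millions of image-caption pairs rather than have them typed in.
image_vectors = {
    "avocado_photo": [0.9, 0.1, 0.0],
    "trumpet_photo": [0.1, 0.9, 0.2],
}
caption_vectors = {
    "an avocado": [0.8, 0.2, 0.1],
    "a trumpet": [0.0, 1.0, 0.1],
}

def best_caption(image_name):
    """Pick the caption whose vector is most similar to the image's vector."""
    image_vec = image_vectors[image_name]
    return max(
        caption_vectors,
        key=lambda caption: cosine_similarity(image_vec, caption_vectors[caption]),
    )

print(best_caption("avocado_photo"))
print(best_caption("trumpet_photo"))
```

In this toy setup, learning would amount to adjusting the numbers so that matching images and captions end up close together and mismatched ones end up far apart.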

When someone describes an image for DALL-E, it generates a set of key features that the image might include. One feature might be the line at the edge of a trumpet. Another might be the curve at the top of a teddy bear’s ear.

Then a second neural network, called a diffusion model, creates the image, generating the pixels needed to realize these features. The latest version of DALL-E, unveiled Wednesday with a new research paper detailing the system, generates high-resolution images that in many cases resemble photographs.
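A loose analogy for this second stage is a loop that starts from random noise and nudges it, step by step, toward an image. The sketch below is only that analogy, not a diffusion model: a real one uses a trained neural network to estimate what to remove at each step, while this toy version is simply handed the finished answer as `target`. The function name, the step count and the three-number "image" are assumptions made for illustration.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Start from random noise and move a little closer to `target` each step.

    `target` stands in for what a trained network would predict; here it is
    given directly, which a real diffusion model never has.
    """
    rng = random.Random(seed)
    # Begin with pure noise, one random value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        # Close a growing share of the remaining gap; the final step closes all of it.
        blend = 1.0 / (steps - step)
        image = [pixel + blend * (goal - pixel) for pixel, goal in zip(image, target)]
    return image

# A hypothetical three-pixel "image" described by brightness values.
target_pixels = [0.2, 0.8, 0.5]
result = toy_denoise(target_pixels)
print([round(pixel, 3) for pixel in result])
```

The point of the sketch is the shape of the process: many small corrections applied to noise, rather than a picture drawn in one pass.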

While DALL-E often fails to understand what someone has described and sometimes distorts the image it produces, OpenAI continues to improve the technology. Researchers can often refine the skills of a neural network by feeding it even larger amounts of data.

They can also build more powerful systems by applying the same concepts to new types of data. The Allen Institute has recently developed a system that can analyze audio as well as images and text. After analyzing millions of YouTube videos, including audio tracks and captions, it learned to identify certain moments in TV shows or movies, such as a barking dog or a slamming door.

Experts believe that researchers will continue to refine such systems. Ultimately, these systems could help businesses improve search engines, digital assistants and other common technologies, and automate new tasks for graphic artists, programmers and other professionals.

But there are caveats to that potential. The AI systems may be biased against women and people of color, in part because they learn their skills from vast amounts of online text, images and other data that exhibit bias. They can be used to generate pornography, hate speech and other offensive material. And many experts believe that the technology will eventually make it so easy to create disinformation that people will have to be skeptical about almost everything they see online.

“We can forge text. We can put text in someone’s voice. And we can forge images and videos,” said Dr. Etzioni. “There is already disinformation online, but the concern is that this is taking disinformation to a new level.”

OpenAI is keeping a tight leash on DALL-E. It will not let outsiders use the system on their own. It places a watermark in the corner of every image it generates. And while the lab plans to open the system to testers this week, the group will be small.

The system also includes filters that prevent users from generating inappropriate images. When asked for “a pig with the head of a sheep,” it declined to produce an image. According to the lab, the combination of the words “pig” and “head” most likely triggered OpenAI’s anti-bullying filters.
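A filter that reacts to a combination of words can be sketched in a few lines. OpenAI has not described how its filters are built, so the example below is an assumption throughout: the `BLOCKED_WORD_PAIRS` list and the `is_blocked` function are hypothetical, and a production filter would almost certainly be more sophisticated.

```python
import re

# Hypothetical blocklist: a prompt is refused when it contains
# every word in any one of these sets.
BLOCKED_WORD_PAIRS = [{"pig", "head"}]

def is_blocked(prompt):
    """Return True if the prompt contains all the words of any blocked set."""
    # Lowercase the prompt and keep only runs of letters as words.
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return any(pair <= words for pair in BLOCKED_WORD_PAIRS)

print(is_blocked("a pig with the head of a sheep"))
print(is_blocked("a teddy bear playing a trumpet underwater"))
```

A rule this blunt shows why a harmless request can be refused: the filter sees only that both words are present, not what the sentence means.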

“This is not a product,” said Mira Murati, head of research at OpenAI. “The idea is to understand the possibilities and limitations and give us the opportunity to build mitigation in.”

OpenAI can control the system’s behavior in some ways. But others around the world may soon develop similar technology that gives almost anyone the same powers. Boris Dayma, an independent researcher in Houston, worked from a research paper describing an early version of DALL-E and has already built and released a simpler version of the technology.

“People need to know that the images they see may not be real,” he said.