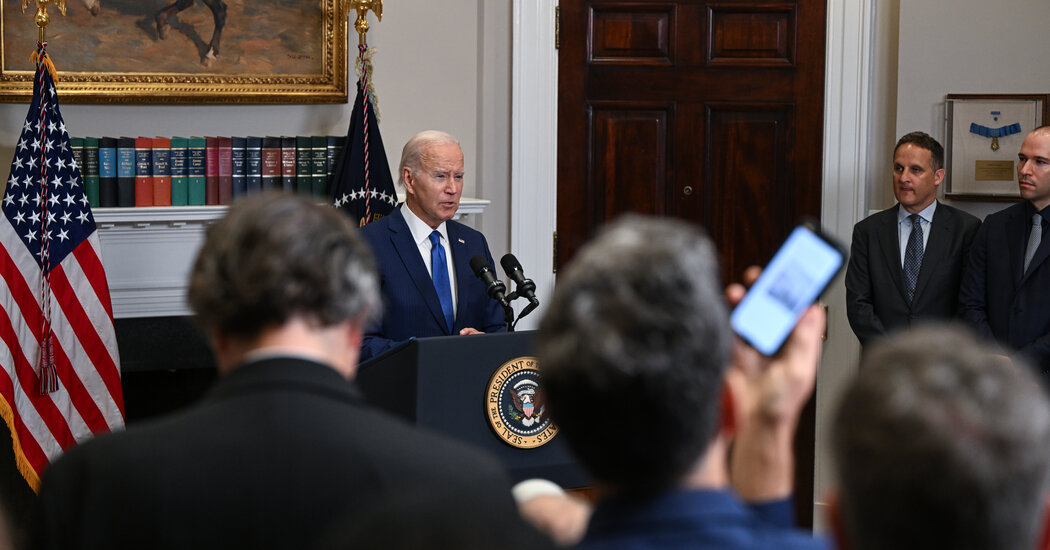

This week, the White House announced it had received “voluntary commitments” from seven leading AI companies to manage the risks of artificial intelligence.

Getting the companies – Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI – to agree to something is a step forward. They include bitter rivals with subtle but important differences in how they approach AI research and development.

For example, Meta is so eager to get its AI models into the hands of developers that it has made many of them open source, making their code available to anyone. Other labs, such as Anthropic, have taken a more cautious approach and released their technology in more limited ways.

But what do these commitments actually mean? And given that they aren’t backed by the force of law, are they likely to change much about the way AI companies operate?

Given the potential stakes of AI regulation, the details matter. So let’s take a closer look at what has been agreed here and assess the potential impact.

Commitment 1: The companies commit to conducting internal and external security testing of their AI systems prior to release.

Each of these AI companies already conducts security testing, often referred to as “red-teaming,” on its models before they are released. So in one sense, this isn’t really a new commitment. It’s also a vague promise: it doesn’t say much about what kind of testing is required, or who will do it.

In a statement accompanying the pledges, the White House said only that testing of AI models “will be conducted in part by independent experts” and will focus on AI risks “such as biosecurity and cybersecurity, as well as their wider societal impacts.”

It’s a good idea to have AI companies publicly commit to continuing to do this kind of testing, and to encourage more transparency in the testing process. And there are some types of AI risks – such as the danger that AI models could be used to develop bioweapons – that government and military officials are probably better suited than companies to evaluate.

I would like to see the AI industry agree on a standard set of safety tests, such as the “autonomous replication” tests that the Alignment Research Center performs on pre-release models from OpenAI and Anthropic. I would also like to see the federal government fund this kind of testing, which can be expensive and requires engineers with significant technical expertise. Right now, many safety tests are funded and overseen by the companies themselves, which raises obvious conflict-of-interest questions.

Commitment 2: The companies commit to sharing information across the industry and with governments, civil society and academia about managing AI risks.

This commitment is also a bit vague. Several of these companies already publish information about their AI models, usually in academic papers or corporate blog posts. A few of them, including OpenAI and Anthropic, also publish documents called “system cards,” which outline the steps they’ve taken to make those models safer.

But they have also occasionally withheld information, citing safety concerns. When OpenAI released its latest AI model, GPT-4, this year, it broke with common industry practice and chose not to disclose how much data the model was trained on, or how big the model was (a metric known as “parameters”). It said it declined to release this information because of concerns about competition and safety. It also happens to be the kind of data that tech companies like to keep away from competitors.

Under these new commitments, will AI companies be compelled to make that kind of information public? And what if doing so risks accelerating the AI arms race?

I suspect the White House’s goal is not so much to force companies to disclose their parameter counts, but rather to encourage them to share information with each other about the risks their models may or may not pose.

But even that kind of information sharing can be risky. If Google’s AI team, during pre-release testing, prevented a new model from being used to design a deadly bioweapon, should it share that information outside Google? Would doing so risk giving bad actors ideas about how to get a less guarded model to do the same job?

Commitment 3: The companies commit to investing in cybersecurity and insider-threat safeguards to protect proprietary and unreleased model weights.

This one is pretty straightforward and uncontroversial among the AI insiders I’ve talked to. “Model weights” is a technical term for the mathematical instructions that allow AI models to function. Weights are what you’d want to steal if you were an agent of a foreign government (or a rival company) trying to build your own version of ChatGPT or another AI product. And they’re something AI companies have every interest in keeping under tight control.

There have already been well-publicized problems with model weights leaking. The weights for Meta’s original LLaMA language model, for example, were leaked on 4chan and other websites just days after the model was publicly released. Given the risk of more leaks, and the interest other countries may have in stealing this technology from U.S. companies, asking AI companies to invest more in their own security seems like a good idea.

Commitment 4: The companies commit to facilitating the discovery and reporting of vulnerabilities in their AI systems by third parties.

I’m not exactly sure what this means. Every AI company has discovered vulnerabilities in its models after releasing them, usually because users try to do bad things with the models or get around their guardrails (a practice known as “jailbreaking”) in ways the companies didn’t foresee.

The White House commitment calls for companies to establish a “robust reporting mechanism” for these vulnerabilities, but it’s not clear what that might mean. An in-app feedback button, similar to the one that allows Facebook and Twitter users to report posts that break rules? A bug bounty program, like the one OpenAI started this year to reward users who find bugs in its systems? Something else? We’ll have to wait for more details.

Commitment 5: The companies commit to developing robust technical mechanisms, such as a watermarking system, to ensure that users know when content is AI-generated.

This is an interesting idea, but it leaves a lot of room for interpretation. So far, AI companies have struggled to build tools that let people tell whether or not they are looking at AI-generated content. There are good technical reasons for this, but it’s a real problem when people can pass off AI-generated work as their own. (Ask any high school teacher.) And many of the tools currently promoted as able to detect AI output can’t actually do so with any real accuracy.

I am not optimistic that this problem can be solved completely. But I’m glad that companies are committing to work on it.

Commitment 6: The companies commit to publicly reporting the capabilities, limitations, and areas of appropriate and inappropriate use of their AI systems.

Another sensible-sounding promise with plenty of wiggle room. How often should companies report on the capabilities and limitations of their systems? How detailed should that information be? And given that many of the companies building AI systems have been surprised by their own systems’ capabilities after the fact, how well can they be expected to describe them in advance?

Commitment 7: The companies commit to prioritizing research into the societal risks that AI systems can pose, including to prevent harmful bias and discrimination and to protect privacy.

A commitment to “prioritizing research” is about as vague as commitments get. Still, I’m sure it will be well received by many in the AI ethics crowd, who want AI companies to focus on preventing near-term harms like bias and discrimination rather than worrying about doomsday scenarios, as the AI safety people do.

If you’re confused by the difference between “AI ethics” and “AI safety,” know that there are two warring factions within the AI research community, each thinking the other is focused on preventing the wrong kinds of harm.

Commitment 8: The companies commit to developing and deploying advanced AI systems to address society’s greatest challenges.

I don’t think many people would argue that advanced AI should not be used to tackle society’s biggest challenges. The White House lists “cancer prevention” and “climate change mitigation” as two of the areas where it would like AI companies to focus their efforts, and it won’t get any argument from me on that.

What complicates this goal somewhat, however, is that in AI research, work that looks frivolous at first often turns out to have more serious implications. Some of the technology that went into DeepMind’s AlphaGo, an AI system trained to play the board game Go, turned out to be useful in predicting the three-dimensional structures of proteins, a major discovery that gave a boost to basic scientific research.

Overall, the White House’s deal with AI companies seems more symbolic than substantive. There is no enforcement mechanism to ensure companies honor these commitments, and many of them reflect precautions AI companies are already taking.

Still, it’s a reasonable first step. And by agreeing to these commitments, the AI companies appear to have learned from the failures of earlier tech companies, which waited to engage with the government until they got into trouble. In Washington, at least when it comes to tech regulation, it pays to show up early.