This context limit naturally restricts how much of a codebase an LLM can process at once, and if you feed the AI model many huge code files (which the LLM has to re-evaluate every time you send another message), it can burn through token or usage limits quite quickly.

Tricks of the trade

To get around these limits, the makers of coding agents use several tricks. For one, AI models are fine-tuned to write code that outsources tasks to other software tools. For example, they can write Python scripts to extract data from images or files instead of running the entire file through an LLM, which saves tokens and avoids inaccurate results.
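To make that concrete, here is a minimal sketch of the kind of throwaway script an agent might write. It is illustrative only: the function name `summarize_csv` and the sample columns are hypothetical, not taken from any real agent. The script reads a CSV row by row and returns a few summary values, so only that small result would need to enter the model's context rather than the whole file.

```python
import csv
import io


def summarize_csv(lines, numeric_column):
    """Stream CSV rows and return a compact summary of one numeric column.

    `lines` is any iterable of text lines, such as an open file object.
    The name and return shape are assumptions made for this sketch.
    """
    reader = csv.DictReader(lines)
    count = 0
    total = 0.0
    for row in reader:
        count += 1
        total += float(row[numeric_column])
    return {
        "columns": reader.fieldnames,
        "rows": count,
        "mean": total / count if count else None,
    }


# Stand-in for a large file on disk; a real script would pass open(path).
sample = io.StringIO("name,size\na,10\nb,20\nc,30\n")
print(summarize_csv(sample, "size"))
```

The point of the design is that the file's contents never pass through the LLM at all. The model only sees the script it wrote and the short dictionary the script prints.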

Anthropic's documentation notes that Claude Code also uses this approach to perform complex data analysis across large databases, write targeted queries, and use Bash commands such as “head” and “tail” to analyze large amounts of data without ever loading the entire data objects into the context.
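The same idea can be sketched in Python for readers who don't live in a terminal. The `head` and `tail` functions below are hypothetical helpers written for this illustration, not part of Claude Code. They mimic the Bash commands by streaming a file line by line and keeping only the first or last few lines in memory.

```python
from collections import deque
from itertools import islice


def head(path, n=10):
    """Return the first n lines of a file without reading the rest."""
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in islice(f, n)]


def tail(path, n=10):
    """Return the last n lines of a file.

    The file is streamed once from start to end, but the bounded deque
    holds at most n lines at any moment.
    """
    with open(path, encoding="utf-8") as f:
        return [line.rstrip("\n") for line in deque(f, maxlen=n)]
```

An agent that peeks at a large log this way only has to put a handful of lines into its context, which is usually enough to learn the file's format and decide what targeted query to run next.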

(In a sense, these AI agents are guided, but semi-autonomous, tool-using programs that are a significant extension of a concept we first saw in early 2023.)

Another major breakthrough in the agent space came from dynamic context management. Agents can do this in a number of ways that aren't fully revealed in proprietary coding models, but we do know the main technique they use: context compression.

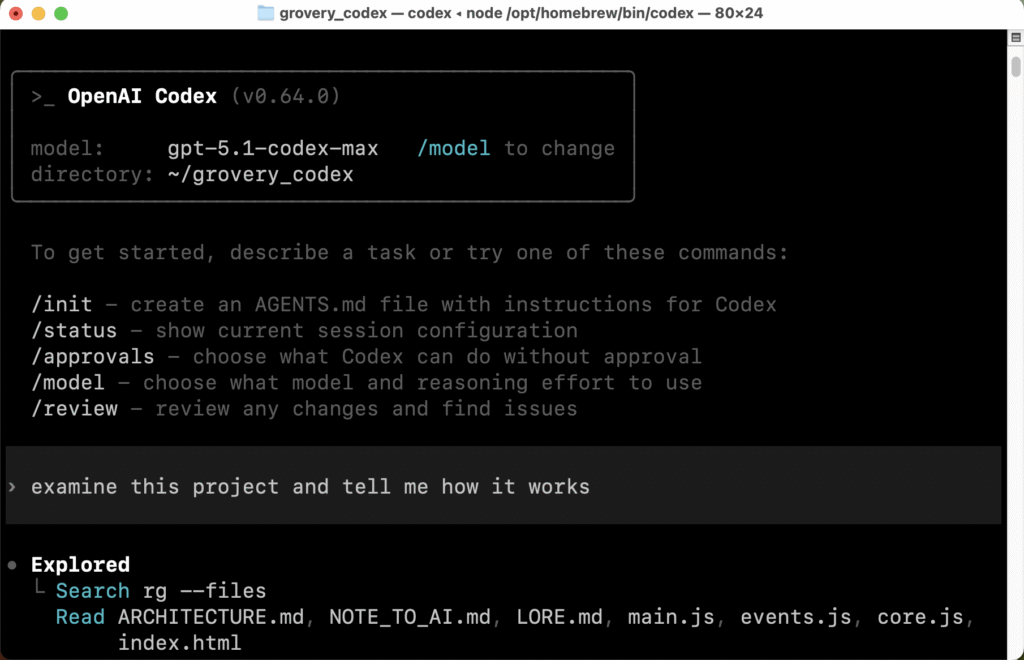

The command-line version of OpenAI Codex running in a macOS terminal window.

Credit: Benj Edwards

When a coding LLM approaches its context limit, this technique compresses the context history by summarizing it, losing details in the process but condensing the history to its key points. Anthropic's documentation describes this “compaction” as distilling context contents in a high-fidelity manner, preserving important details such as architectural decisions and unresolved bugs while discarding redundant tool outputs.
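The general shape of this technique can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any proprietary agent actually implements it: token counts are approximated by counting words, the names `compact` and `toy_summarize` are hypothetical, and the summarizer is a placeholder for what would be an LLM call in a real agent.

```python
def estimate_tokens(messages):
    # Crude assumption: whitespace-separated words stand in for real tokens.
    return sum(len(m["content"].split()) for m in messages)


def compact(messages, limit, keep_recent, summarize):
    """Replace older messages with one summary once the history exceeds limit.

    `summarize` is any callable that turns a list of messages into a string.
    """
    if estimate_tokens(messages) <= limit or len(messages) <= keep_recent:
        return messages
    split = len(messages) - keep_recent
    old, recent = messages[:split], messages[split:]
    summary = {
        "role": "system",
        "content": "Summary of earlier work: " + summarize(old),
    }
    return [summary] + recent


def toy_summarize(messages):
    # Placeholder only: keeps the first two words of each message.
    return "; ".join(" ".join(m["content"].split()[:2]) for m in messages)


history = [
    {"role": "user", "content": "Refactor the parser module for speed"},
    {"role": "assistant", "content": "Done, two tests still fail in lexer"},
    {"role": "user", "content": "Fix those tests"},
]
print(compact(history, limit=8, keep_recent=1, summarize=toy_summarize))
```

Keeping the most recent messages verbatim while summarizing the older ones is one plausible design choice here. It trades detail about early work for room to keep going, which is the loss the article describes.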

This means that AI coding agents periodically “forget” much of what they are doing each time this compression occurs, but unlike older LLM-based systems, they are not completely clueless about what has happened and can quickly reorient themselves by reading existing code, written notes left in files, change logs, and so on.