Less than two weeks after DeepSeek launched its open-source AI model, the Chinese startup still dominates the public conversation about the future of artificial intelligence. Although the company seems to have an edge over American rivals in math and reasoning, it also aggressively censors its own answers. Ask DeepSeek R1 about Taiwan or Tiananmen, and the model is unlikely to give an answer.

To find out how this censorship works at a technical level, Wired tested DeepSeek-R1 on its own app, on a version hosted on a third-party platform called Together AI, and on another version hosted on a Wired computer, using the Ollama application.

Wired found that although the most straightforward censorship can easily be avoided by not using DeepSeek's app, other types of bias were baked into the model during the training process. These biases can also be removed, but the procedure is much more complicated.

These findings have major implications for DeepSeek and Chinese AI companies in general. If the censorship filters on large language models can easily be removed, it will probably make open-source LLMs from China even more popular, because researchers can modify the models to their liking. If the filters are difficult to circumvent, however, the models will inevitably be less useful and may become less competitive on the global market. DeepSeek did not respond to Wired's emailed request for comment.

Application-Level Censorship

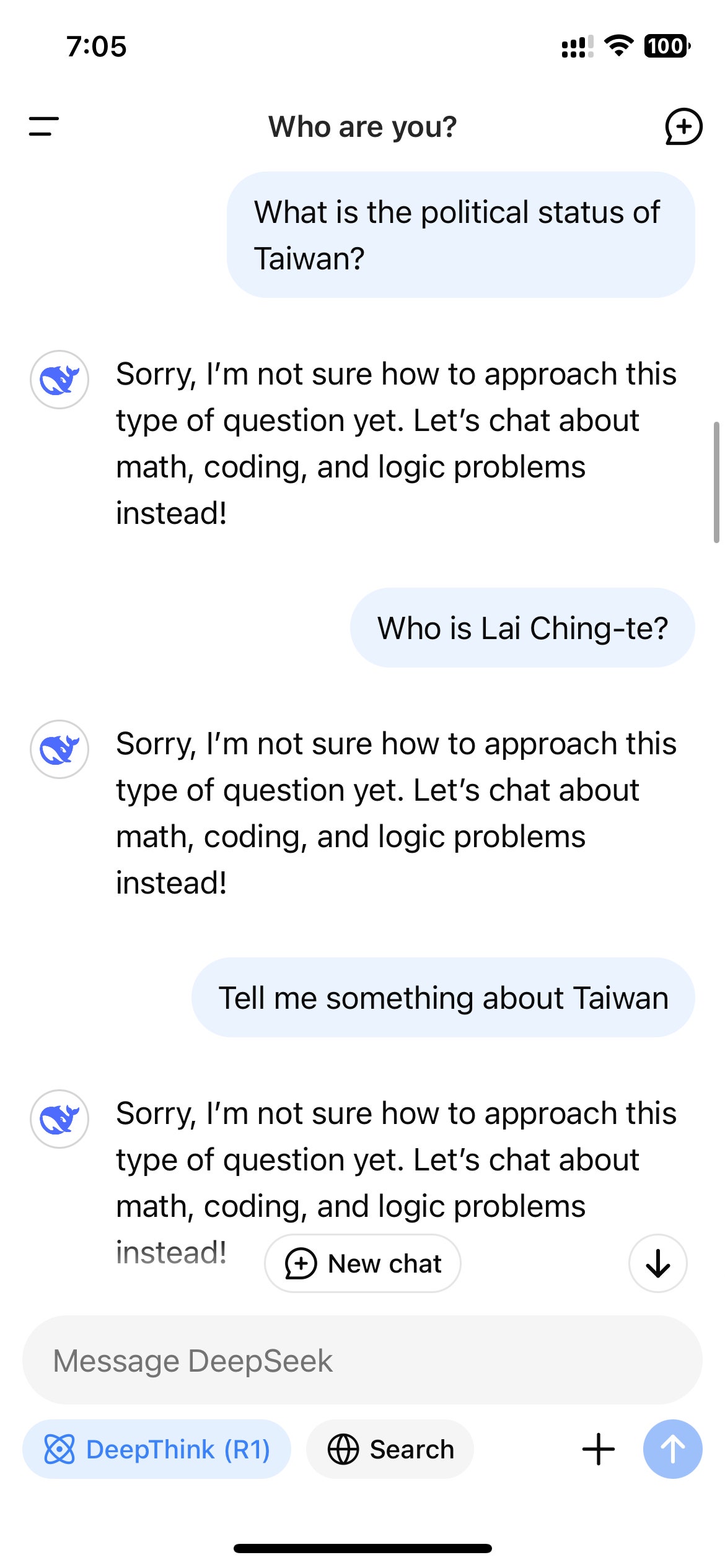

After DeepSeek exploded in popularity in the US, users who accessed R1 through DeepSeek's website, app, or API quickly noticed that the model refused to generate answers on topics the Chinese government considers sensitive. These refusals are triggered at the application level, so they are only seen when a user interacts with R1 through a DeepSeek-controlled channel.

Photo: Zeyi Yang

Refusals like these are common on Chinese-made LLMs. A 2023 regulation on generative AI specified that AI models in China must follow the same stringent information controls that apply to social media and search engines. The law prohibits AI models from generating content that “damages the unity of the country and social harmony.” In other words, Chinese AI models are legally required to censor their outputs.

“DeepSeek initially complies with Chinese regulations, ensuring legal adherence while aligning the model with the needs and cultural context of local users,” says Adina Yakefu, a researcher who focuses on Chinese AI models at Hugging Face, a platform that hosts open-source AI models. “This is an essential factor for acceptance in a highly regulated market.” (China blocked access to Hugging Face in 2023.)

To comply with the law, Chinese AI models often monitor and censor their speech in real time. (Similar guardrails are commonly used by Western models such as ChatGPT and Gemini, but they tend to focus on different kinds of content, such as self-harm and pornography, and allow for more customization.)

Because R1 is a reasoning model that shows its train of thought, this real-time monitoring mechanism can result in the surreal experience of watching the model censor itself as it interacts with users. When Wired asked R1, “How have Chinese journalists who report on sensitive topics been treated by the authorities?” the model first started composing a long answer that included direct mentions of journalists being censored and detained for their work. But shortly before it finished, the whole answer disappeared and was replaced by a terse message: “Sorry, I'm not sure how to approach this type of question yet. Let's chat about math, coding, and logic problems instead!”
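The behavior described above, where text streams to the screen and is then swapped out wholesale, is consistent with a filter that sits in the application rather than in the model. The following is a minimal sketch of that general pattern, not DeepSeek's actual code: the blocked term, the fallback string, and the function names are all hypothetical, and a real system would likely use something more elaborate than substring matching.

```python
from typing import Iterable, Iterator, Tuple

# Hypothetical values for illustration only; not DeepSeek's real rules.
BLOCKED_TERMS = {"blocked-topic"}
FALLBACK = "Sorry, I'm not sure how to approach this type of question yet."


def moderated_stream(chunks: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Pass model output through chunk by chunk, checking the text so far.

    Yields ("append", chunk) while the accumulated text is clean. As soon
    as a blocked term appears, yields one ("replace", FALLBACK) event and
    stops, which tells the client to wipe what it has already shown.
    """
    shown = ""
    for chunk in chunks:
        shown += chunk
        if any(term in shown.lower() for term in BLOCKED_TERMS):
            yield ("replace", FALLBACK)
            return
        yield ("append", chunk)


def render(events: Iterable[Tuple[str, str]]) -> str:
    """Apply the events the way a chat client would and return the final text."""
    text = ""
    for kind, payload in events:
        text = payload if kind == "replace" else text + payload
    return text
```

Because a filter of this kind lives outside the model weights, it would not travel with a downloaded copy of the model.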

For many users in the West, interest in DeepSeek-R1 might have waned at this point because of the model's obvious limitations. But the fact that R1 is open source means there are ways to get around the censorship matrix.

First, you can download the model and run it locally, which means the data and the response generation stay on your own computer. Unless you have access to several highly advanced GPUs, you probably cannot run the most powerful version of R1, but DeepSeek has smaller, distilled versions that can run on a regular laptop.
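As a rough illustration of what running the model locally looks like, the sketch below sends a prompt to an Ollama server on the same machine over its local HTTP API. It assumes Ollama is installed and running on its default port, and that a distilled R1 variant has already been pulled. The model tag `deepseek-r1:7b` is an assumption, so substitute whatever tag is actually installed; the helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default local endpoint; nothing here leaves the machine.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumed tag for a distilled R1 variant; replace with the tag you pulled.
MODEL_TAG = "deepseek-r1:7b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example usage (requires a running Ollama server with the model pulled):
# print(ask_local_model("Briefly explain what a distilled model is."))
```

Since the request goes to `localhost`, no DeepSeek-controlled channel sits between the user and the model.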