Aurich Lawson / Getty Images

Matrix multiplication is at the heart of many machine learning breakthroughs, and it just got even faster—twice. Last week, DeepMind announced that it had discovered a more efficient way to perform matrix multiplication, capturing a 50-year-old record. This week, two Austrian researchers from Johannes Kepler University Linz claim to have improved that new record by one step.

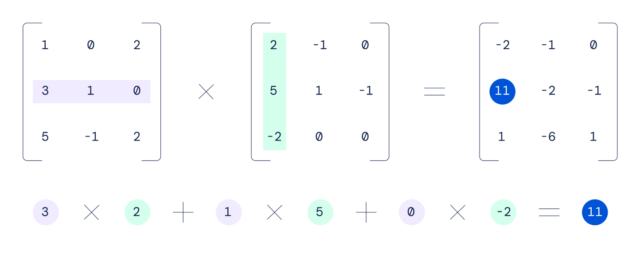

Matrix multiplication, which involves multiplying two rectangular sequences of numbers, is often found at the heart of speech recognition, image recognition, smartphone image processing, compression and computer graphics generation. Graphics processing units (GPUs) are particularly good at performing matrix multiplication due to their highly parallel nature. They can break a large matrix math problem into many pieces and attack parts of it at the same time with a special algorithm.

In 1969, a German mathematician named Volker Strassen discovered the previous best algorithm for multiplying 4×4 matrices, reducing the number of steps required to perform a matrix calculation. For example, multiplying two 4×4 matrices together using a traditional school class method would take 64 multiplications, while Strassen’s algorithm can deliver the same performance in 49 multiplications.

Deep Mind

Using a neural network called AlphaTensor, DeepMind discovered a way to reduce that number to 47 multiplications, and the researchers published a paper on the achievement in Nature last week.

Going from 49 steps to 47 steps doesn’t sound like much, but when you consider how many trillions of matrix calculations happen in a GPU every day, even incremental improvements can translate into major efficiency gains, allowing AI applications to run faster on existing hardware.

If math is just a game, AI wins

AlphaTensor is a descendant of AlphaGo (who defeated world champion) To go players in 2017) and AlphaZero, which tackled chess and shogi. DeepMind calls AlphaTensor “the first AI system for discovering new, efficient and demonstrably correct algorithms for fundamental tasks such as matrix multiplication.”

To discover more efficient matrix math algorithms, DeepMind set up the problem as a single-player game. The company wrote in more detail about the process in a blog post last week:

In this game, the board is a three-dimensional tensor (series of numbers), which indicates how far the current algorithm is incorrect. By means of a series of allowed moves, which correspond to the instructions of the algorithm, the player tries to change the tensor and set the input to zero. When the player succeeds, it results in a demonstrably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is determined by the number of steps taken to zero the tensor.

DeepMind then trained AlphaTensor using reinforcement learning to play this fictional math game – similar to how AlphaGo learned to play To go– and it gradually improved over time. It eventually rediscovered Strassen’s work and that of other human mathematicians, and surpassed them, according to DeepMind.

In a more complicated example, AlphaTensor discovered a new way to perform 5×5 matrix multiplication in 96 steps (versus 98 for the older method). This week Manuel Kauers and Jakob Moosbauer from Johannes Kepler University in Linz, Austria, has published a paper claiming to have reduced that number by one, to 95 multiplications. It’s no coincidence that this seemingly record-breaking new algorithm came so quickly because it built on DeepMind’s work. In their paper, Kauers and Moosbauer write: “This solution is obtained from the scheme of [DeepMind’s researchers] by applying a series of transformations leading to a scheme from which one multiplication can be eliminated.”

Technical progress is building, and with AI looking for new algorithms, it’s possible other longstanding math records could fall soon. Just as computer-aided design (CAD) enabled the development of more complex and faster computers, AI can help human engineers accelerate their own deployment.