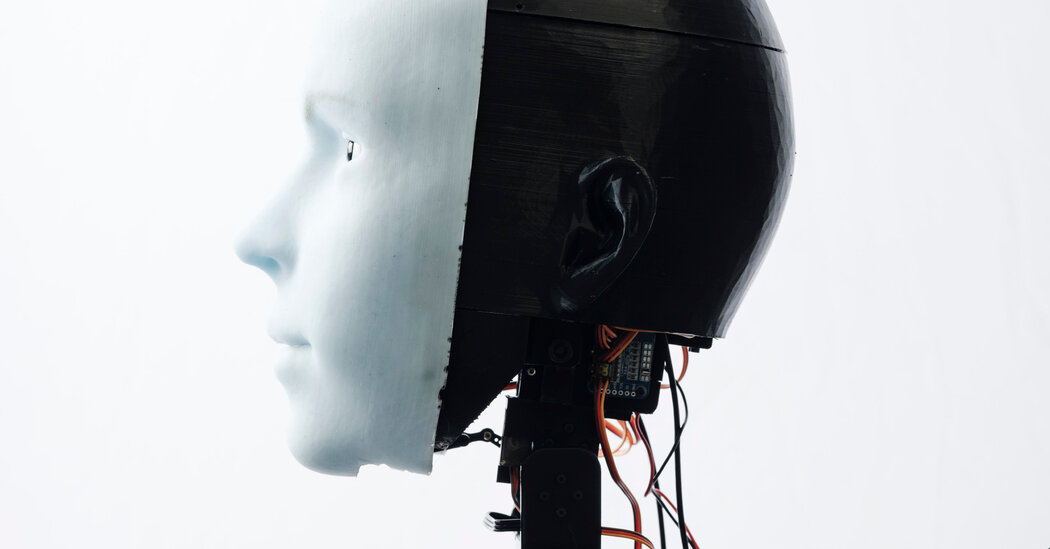

But was it really conscious?

The risk of committing to any theory of consciousness is that doing so invites criticism. Sure, self-awareness seems important, but aren’t there other key features of consciousness? Can we call something conscious if it doesn’t feel conscious to us?

Dr. Chella believes that consciousness cannot exist without language, and he has developed robots that can form internal monologues, reason for themselves, and reflect on the things they see around them. One of his robots recently recognized itself in a mirror, passing what is probably the most famous test of animal self-awareness.

Joshua Bongard, a roboticist at the University of Vermont and a former member of the Creative Machines Lab, believes that consciousness is not just cognition and mental activity but has an intrinsically physical aspect. He has developed creatures called xenobots, made entirely of frog cells linked together so that a programmer can control them like machines. According to Dr. Bongard, humans and animals haven’t just evolved to adapt to and interact with their environment; our tissues evolved to serve these functions, and our cells evolved to serve our tissues. “What we are is intelligent machines made of intelligent machines made of intelligent machines, all the way down,” he said.

This summer, around the same time Dr. Lipson and Dr. Chen released their latest robot, a Google engineer claimed that the company’s recently improved chatbot, called LaMDA, was conscious and deserved to be treated like a small child. The claim was met with skepticism, especially since, as Dr. Lipson noted, the chatbot was processing “a code written to complete a task.” There was no underlying structure of consciousness, other researchers said, just the illusion of consciousness. Dr. Lipson added: “The robot was not self-aware. It’s a bit like cheating.”

But with so much disagreement, who’s to say what counts as cheating?

🦾🦾🦾

Eric Schwitzgebel, a philosophy professor at the University of California, Riverside, who has written about artificial consciousness, said the problem with this general uncertainty was that, at the rate things are progressing, humanity would likely develop a robot that many people believe is conscious before we agree on the criteria for consciousness. If that happens, should the robot get rights? Freedom? Should it be programmed to feel happiness when it serves us? Should it be allowed to speak for itself? To vote?

(Such questions have fueled an entire subgenre of science fiction in books by authors like Isaac Asimov and Kazuo Ishiguro and on television shows like Westworld and Black Mirror.)