“It always surprises me that in the physical world there are really strict guidelines when we release products,” says Farid. “You can’t put a product on the market and hope it won’t kill your customer. But with software, we’re like, ‘This doesn’t really work, but let’s see what happens when we release it to billions of people.’”

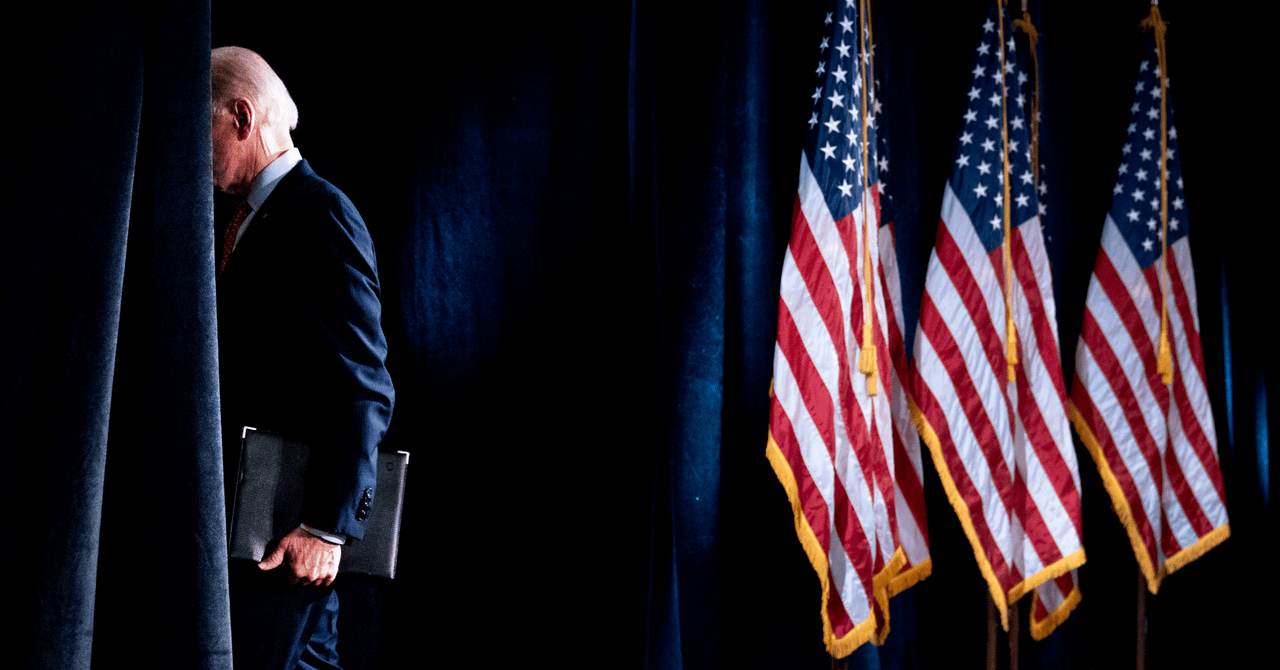

If deepfakes start spreading in significant numbers during the election, it’s easy to imagine someone like Donald Trump sharing this kind of content on social media and claiming it’s real. A deepfake of President Biden saying something disqualifying could come out shortly before the election, and many people might never find out it was AI-generated. After all, research has consistently shown that fake news spreads further than real news.

Even if deepfakes don’t become ubiquitous before the 2024 election, which is still 18 months away, the mere fact that this kind of content can be created could influence the vote. Once people know that fraudulent images, audio, and video can be produced with relative ease, they may start to mistrust the legitimate material they come across.

“In some ways, deepfakes and generative AI don’t even have to be involved in the election to cause disruption, because the well is now poisoned with the idea that anything can be fake,” says Ajder. “That’s a very handy excuse if something damaging comes out that shows you. You can dismiss it as fake.”

So what can be done about this problem? One option is something like C2PA, a standard for content provenance. With it, a device such as a phone or video camera cryptographically signs the content it captures, together with a record of who took the image, where, and when. Because the signature no longer checks out if the content or that record is altered, people making legitimate videos can show that they are, in fact, legitimate.
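To make the idea concrete, here is a minimal Python sketch of a signed provenance record. It is not the C2PA format: the field names and the device key are hypothetical, and where real C2PA signing relies on certificate-backed public-key signatures, this sketch swaps in an HMAC with a shared secret from Python’s standard library. It only illustrates the core property: change the pixels or the metadata, and verification fails.

```python
import hashlib
import hmac
import json

# Hypothetical device key. Real C2PA signing uses certificate-backed
# public-key signatures; HMAC with a shared secret is a stdlib stand-in.
DEVICE_KEY = b"hypothetical-device-secret"


def sign_capture(content: bytes, author: str, location: str, timestamp: str) -> dict:
    """Bundle capture metadata with a hash of the content and sign the bundle."""
    claim = {
        "author": author,
        "location": location,
        "timestamp": timestamp,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialize deterministically so signer and verifier hash identical bytes.
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(content: bytes, record: dict) -> bool:
    """Return True only if neither the content nor its metadata changed."""
    payload = json.dumps(record["claim"], sort_keys=True).encode()
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, record["signature"]):
        return False  # metadata was altered, or signed with a different key
    # Signature is valid; now check the content still matches its recorded hash.
    return record["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()


photo = b"...raw image bytes..."
record = sign_capture(photo, "Jane Doe", "Washington, DC", "2023-05-01T12:00:00Z")
print(verify_capture(photo, record))            # True
print(verify_capture(photo + b"edit", record))  # False
```

Note that provenance works in one direction only: a valid signature shows where a file came from, but a missing one does not prove the file is fake.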

Two other options are fingerprinting and watermarking of images and videos. Fingerprinting involves computing a so-called “hash” of the content, a short string of data derived from it, so that the content can later be checked against that hash and verified as legitimate. Watermarking, as the name suggests, involves embedding a digital watermark in images and videos.
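As a rough illustration, the Python sketch below contrasts two kinds of fingerprint. A cryptographic hash such as SHA-256 changes completely if a single byte changes, while a perceptual “average hash” (a simple technique chosen here for illustration; production systems use more robust variants) stays the same under small edits such as a uniform brightness shift. The tiny 4x4 grayscale grid stands in for a downscaled image, and all function names are hypothetical.

```python
import hashlib


def exact_fingerprint(data: bytes) -> str:
    """Cryptographic hash: any change to the bytes yields a different string."""
    return hashlib.sha256(data).hexdigest()


def average_hash(pixels: list[list[int]]) -> int:
    """Perceptual 'average hash': one bit per pixel, set if brighter than the mean.

    Assumes the image has already been downscaled to a small grayscale grid.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes (0 means a match)."""
    return bin(a ^ b).count("1")


def to_bytes(pixels: list[list[int]]) -> bytes:
    """Flatten a grid of 0-255 values into raw bytes."""
    return bytes(p for row in pixels for p in row)


original = [[10, 200, 30, 220], [15, 210, 25, 205], [12, 190, 35, 215], [20, 180, 40, 225]]
brightened = [[p + 5 for p in row] for row in original]  # a small, harmless edit
inverted = [[255 - p for p in row] for row in original]  # a drastic change

print(exact_fingerprint(to_bytes(original)) == exact_fingerprint(to_bytes(brightened)))  # False
print(hamming(average_hash(original), average_hash(brightened)))  # 0
print(hamming(average_hash(original), average_hash(inverted)))  # 16
```

The exact hash suits proving that a file is byte-for-byte what was published; the perceptual hash tolerates re-encoding or minor adjustments, at the cost of occasional false matches between genuinely different images.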

Developing AI tools to detect deepfakes is often proposed, but Ajder is not sold on that idea. He says the technology isn’t reliable enough and won’t be able to keep up with the ever-changing generative AI tools being developed.

A final option is to develop a kind of instant fact-checker for social media users. Aviv Ovadya, a researcher at Harvard’s Berkman Klein Center for Internet & Society, says such a tool could let you highlight a piece of content in an app and send it to a “contextualization engine” that tells you whether it is accurate.

“Media literacy evolving with the pace of advancement in this technology is not easy. You need it to be almost immediate — where you look at something you see online and get your context on that thing,” says Ovadya. “What are you looking at? You could have it reference sources you can trust.”

If you see something that might be fake news, the tool could quickly tell you whether it’s accurate. If an image or video seems fake, it could check sources to see whether it has been verified. Ovadya says such a tool could be built into apps like WhatsApp and Twitter or offered as a standalone app. The problem, he says, is that many of the founders he’s talked to simply don’t see much money in developing it.

Whether any of these potential solutions will be adopted before the 2024 election remains to be seen, but the threat is growing: a lot of money is going into developing generative AI, and little into ways to prevent the spread of this kind of disinformation.

“I think we’re going to see a deluge of tools, as we’re already seeing, but I think [AI-generated political content] will continue,” says Ajder. “Essentially, we’re not in a good position to deal with these incredibly fast-moving, powerful technologies.”