Even when these AI search tools cited sources, they often directed users to syndicated versions of content on platforms such as Yahoo News rather than to the original publishers' sites. This occurred even in cases where publishers had formal licensing agreements with AI companies.

URL fabrication emerged as another significant problem. More than half of the citations from Google's Gemini and Grok 3 led users to fabricated or broken URLs that resulted in error pages. Of the 200 citations tested from Grok 3, 154 resulted in broken links.

These issues create significant tension for publishers, who face difficult choices. Blocking AI crawlers might lead to a complete loss of attribution, while permitting them allows widespread reuse without driving traffic back to the publishers' own websites.

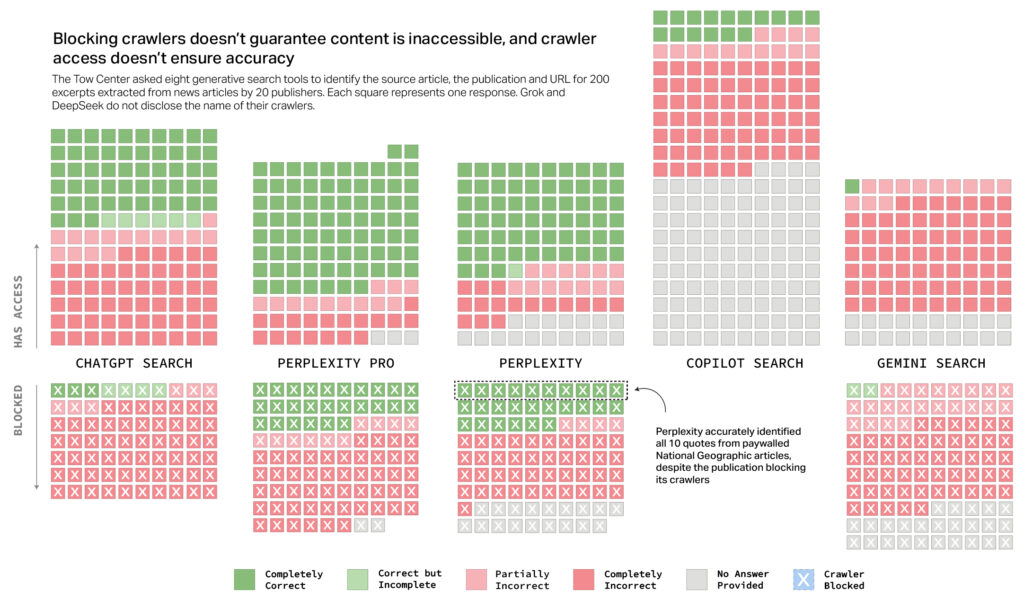

A graph from CJR shows that blocking crawlers does not mean that AI search providers honor the request.

Credit: CJR

Mark Howard, chief operating officer at Time magazine, expressed concern to CJR about ensuring transparency and control over how Time's content appears in AI-generated searches. Despite these issues, Howard sees room for improvement in future iterations, stating, “Today is the worst that the product will ever be,” and citing the substantial investments and engineering efforts aimed at improving these tools.

However, Howard also engaged in some user shaming, suggesting that it is the user's fault if they are not skeptical of the accuracy of free AI tools: “If someone as a consumer now believes that one of these free products will be 100 percent accurate, they are embarrassed.”

OpenAI and Microsoft provided statements to CJR acknowledging receipt of the findings but did not directly address the specific issues. OpenAI noted its promise to support publishers by driving traffic through summaries, quotes, clear links, and attribution. Microsoft stated that it adheres to the Robots Exclusion Protocol and publisher directives.

The latest report builds on earlier findings published by the Tow Center in November 2024, which identified similar accuracy problems in how ChatGPT handles news-related content. For more detail on the fairly exhaustive report, see the Columbia Journalism Review's website.