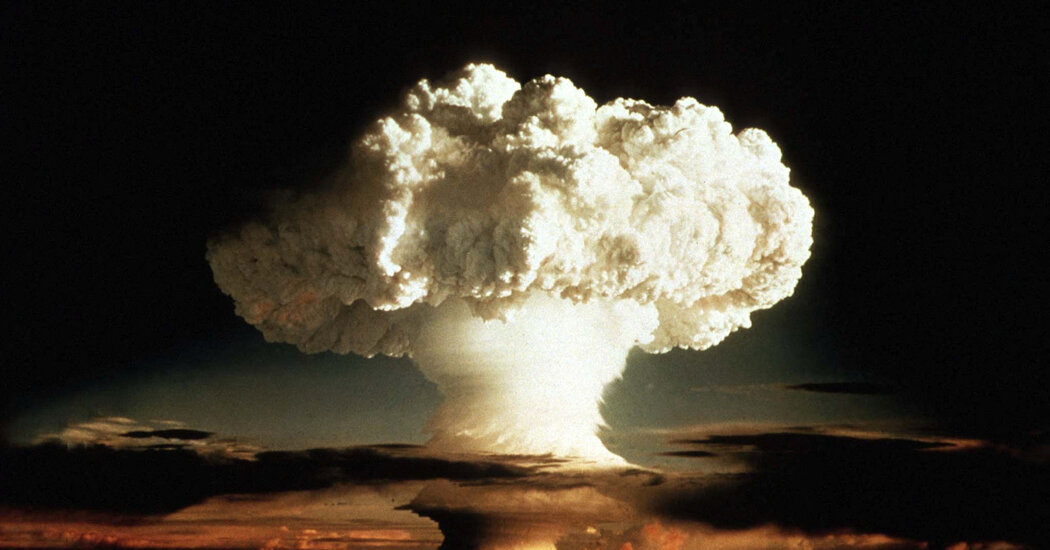

The comparison seems to be everywhere these days. “It’s like nuclear weapons,” said a pioneering artificial intelligence researcher. Top AI executives have compared their product to nuclear energy. And a group of industry leaders warned last week that AI technology could pose an existential threat to humanity, akin to nuclear war.

People have likened AI's advances to the splitting of the atom for years. But the comparison has become sharper with the introduction of AI chatbots and AI makers’ calls for national and international regulation, much as scientists in the 1950s called for guardrails for nuclear weapons. Some experts worry that AI will soon cut jobs or spread disinformation; others fear that hyperintelligent systems could eventually learn to write their own computer code, slip the bonds of human control, and perhaps decide to wipe us out. “The makers of this technology tell us they’re concerned,” said Rachel Bronson, the president of the Bulletin of the Atomic Scientists, which tracks man-made threats to civilization. “The creators of this technology are asking for governance and regulation. The makers of this technology are telling us to pay attention.”

Not every expert finds the comparison apt. Some note that the destructiveness of atomic energy is kinetic and demonstrated, while AI’s danger to humanity remains highly speculative. Others argue that almost every technology, including AI and nuclear energy, has both benefits and risks. “Tell me a technology that can’t be used for anything evil, and I’ll tell you a completely useless technology that can’t be used for anything,” said Julian Togelius, a computer scientist at NYU who works with AI.

But the comparisons have become fast and frequent enough that it can be difficult to know whether doomsayers and defenders are talking about AI or nuclear technology. Take the quiz below to see if you can tell the difference.

Can you tell which topic these quotes are about?

The quotes above are only part of the responses to, and the debate about, AI and nuclear technology. They reveal parallels, but also some notable differences, such as the fear of imminent, fiery destruction by atomic weapons, or the fact that advancements in AI are currently mostly the work of private companies rather than governments.

But in both cases, some of the same people who brought the technology into the world are the loudest in sounding the alarm. “It’s about managing the risks of advancing science,” Ms. Bronson, the president of the Bulletin of the Atomic Scientists, said of AI. “And you don’t have to equate them to learn from it.”