Think of the words swirling around in your head: that tasteless joke you wisely kept to yourself over dinner; your unspoken impression of your best friend’s new partner. Now imagine if someone could listen in.

On Monday, scientists at the University of Texas, Austin, took another step in that direction. In a study published in the journal Nature Neuroscience, the researchers describe an AI that can translate the private thoughts of human subjects by analyzing fMRI scans, which measure blood flow to different brain regions.

Researchers have already developed language decoding methods to capture speech attempts by people who can no longer speak, and to allow paralyzed people to write while thinking only about writing. But the new language decoder is one of the first to not rely on implants. In the study, it was able to convert a person’s imagined speech into actual speech and, when subjects were shown silent movies, it was able to generate relatively accurate descriptions of what was happening on screen.

“This isn’t just a language stimulus,” said Alexander Huth, a neuroscientist at the university who helped lead the study. “We’re getting at meaning, something about the idea of what’s happening. And the fact that that’s possible is very exciting.”

The study focused on three participants, who came to Dr. Huth’s lab to listen to “The Moth” and other narrative podcasts. As they listened, an fMRI scanner recorded blood oxygenation levels in parts of their brains. Next, the researchers used a large language model to match patterns in the brain activity to the words and phrases the participants had heard.

Large language models such as OpenAI’s GPT-4 and Google’s Bard are trained on vast amounts of writing to predict the next word in a phrase or sentence. In the process, the models build maps indicating how words relate to one another. A few years ago, Dr. Huth noticed that particular pieces of these maps, so-called context embeddings, which capture the semantic features or meanings of phrases, could be used to predict how the brain lights up in response to language.
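To make the idea of an “encoding model” concrete, here is a toy sketch, not the study’s code: it assumes that each voxel’s response is roughly a linear function of a context-embedding vector and fits that map with ridge regression. Random numbers stand in for both the embeddings and the fMRI data, and all sizes are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha*I)^-1 X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)

def voxel_correlations(Y_true, Y_pred):
    """Pearson correlation between actual and predicted response, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    return (yt * yp).sum(axis=0) / np.sqrt((yt**2).sum(axis=0) * (yp**2).sum(axis=0))

rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 500, 100, 32, 50  # hypothetical sizes

# Synthetic stand-ins: "embeddings" of what was heard, and "voxel" responses to them.
true_W = rng.normal(size=(n_features, n_voxels))
X_train = rng.normal(size=(n_train, n_features))
Y_train = X_train @ true_W + 0.5 * rng.normal(size=(n_train, n_voxels))
X_test = rng.normal(size=(n_test, n_features))
Y_test = X_test @ true_W + 0.5 * rng.normal(size=(n_test, n_voxels))

# Learn embedding -> brain map on training "stories", then predict held-out ones.
W = fit_ridge(X_train, Y_train, alpha=10.0)
Y_pred = X_test @ W
r = voxel_correlations(Y_test, Y_pred)
print(f"mean held-out correlation across voxels: {r.mean():.2f}")
```

The linear map is the simplest assumption that captures the point: if meaning-bearing embeddings predict held-out brain responses well, the brain and the model are encoding related information.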

According to Shinji Nishimoto, a neuroscientist at Osaka University who was not involved in the study, “brain activity is a kind of scrambled signal, and language models provide ways to decipher it.”

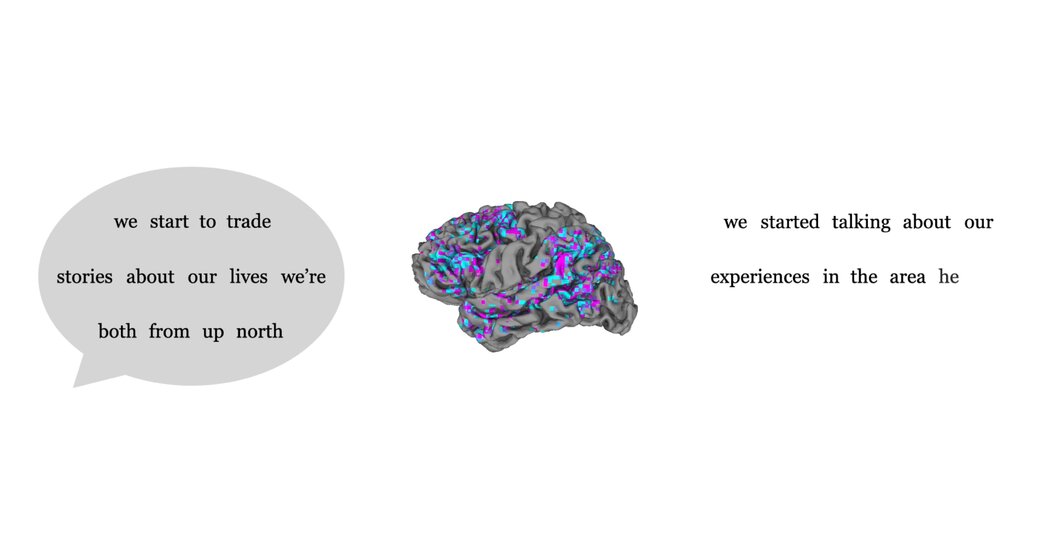

In their study, Dr. Huth and colleagues effectively reversed the process, using another AI to translate the participants’ fMRI images into words and phrases. The researchers tested the decoder by having the participants listen to new recordings, then seeing how closely the translation matched the actual transcript.
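One way to “reverse” an encoding model is to guess word sequences, predict the brain activity each guess would produce, and keep the guesses whose predictions best match what the scanner recorded. The toy sketch below does this with a small beam search. It is an illustration under loudly stated assumptions: the vocabulary, the word vectors, the recency-weighted “context embedding,” and the encoding matrix are all hypothetical stand-ins, and it proposes every vocabulary word at each step where a real system would ask a language model for plausible continuations.

```python
import numpy as np

rng = np.random.default_rng(1)
VOCAB = ["i", "walked", "to", "the", "window", "saw", "nothing", "darkness"]  # toy vocabulary
EMB_DIM, N_VOXELS = 16, 50  # hypothetical sizes
word_vecs = {w: rng.normal(size=EMB_DIM) for w in VOCAB}  # stand-in word vectors
W = rng.normal(size=(EMB_DIM, N_VOXELS))  # stand-in for a fitted encoding model

def context_embedding(words, decay=0.5):
    """Toy context embedding: recency-weighted sum of word vectors."""
    emb = np.zeros(EMB_DIM)
    for w in words:
        emb = decay * emb + word_vecs[w]
    return emb

def predicted_responses(words):
    """Predicted brain response at each time step (one step per word, no lag)."""
    return np.array([context_embedding(words[: t + 1]) @ W for t in range(len(words))])

def score(words, observed):
    """Higher is better: negative squared error against the responses observed so far."""
    pred = predicted_responses(words)
    return -np.sum((pred - observed[: len(words)]) ** 2)

def beam_decode(observed, beam_width=3):
    """Extend each kept guess by one word, rank all guesses, keep the best few."""
    beams = [[]]
    for _ in range(len(observed)):
        candidates = [b + [w] for b in beams for w in VOCAB]
        candidates.sort(key=lambda c: score(c, observed), reverse=True)
        beams = candidates[:beam_width]
    return beams[0]

# Simulate a recording of someone hearing a sentence, then decode it.
true_words = ["i", "walked", "to", "the", "window"]
observed = predicted_responses(true_words) + 0.1 * rng.normal(size=(len(true_words), N_VOXELS))
decoded = beam_decode(observed)
print(" ".join(decoded))
```

Because the data here are synthetic and nearly noiseless, the toy recovers the exact words. Real fMRI signals are slow and blurry, with each measurement mixing the responses to several words, so a decoder of this kind tends to land on a sentence with similar meaning rather than the original wording.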

Almost every word was out of place in the decoded script, but the meaning of the passage was regularly preserved. Essentially, the decoder was paraphrasing.

Original transcript: “I got up from the air mattress and pressed my face against the glass of the bedroom window expecting to see eyes staring back at me, but instead all I found was darkness.”

Decoded from brain activity: “I just walked over to the window and opened the glass. I stood on tiptoe and peered out. I saw nothing and looked up again. I saw nothing.”

During the fMRI scan, participants were also asked to silently imagine telling a story; then they repeated the story aloud for reference. Here, too, the decoding model captured the gist of the unspoken version.

Participant’s version: “Look for a message from my wife saying that she had changed her mind and that she would be back.”

Decoded version: “To see her for some reason I thought she would come to me and say she misses me.”

Finally, the subjects watched a short, silent animated film, again while undergoing an fMRI scan. By analyzing their brain activity, the language model could decode a rough synopsis of what they were viewing, perhaps their internal description of it.

The result suggests that the AI decoder was capturing not just words but also meaning. “Language perception is an externally driven process, while imagination is an active internal process,” said Dr. Nishimoto. “And the authors showed that the brain uses common representations across these processes.”

Greta Tuckute, a neuroscientist at the Massachusetts Institute of Technology who was not involved in the study, said this was “the high-level question.”

“Can we decode meaning from the brain?” she continued. “In some ways they show that, yes, we can.”

This language-decoding method had limitations, Dr. Huth and his colleagues noted. For one, fMRI scanners are bulky and expensive. Moreover, training the model is a long, tedious process, and to be effective it must be done for each individual. When the researchers tried to use a decoder trained on one person to read another person’s brain activity, it failed, suggesting that every brain has unique ways of representing meaning.

Participants could also shield their internal monologues, throwing off the decoder by thinking of other things. AI may be able to read our minds, but for now it will have to read them one at a time, and with our permission.