It’s been six decades since Ivan Sutherland created Sketchpad, a software system that predicted the future of interactive and graphic computing. In the 1970s, he played a role in bringing the computer industry together to build a new type of microchip with hundreds of thousands of circuits that would become the foundation of today’s semiconductor industry.

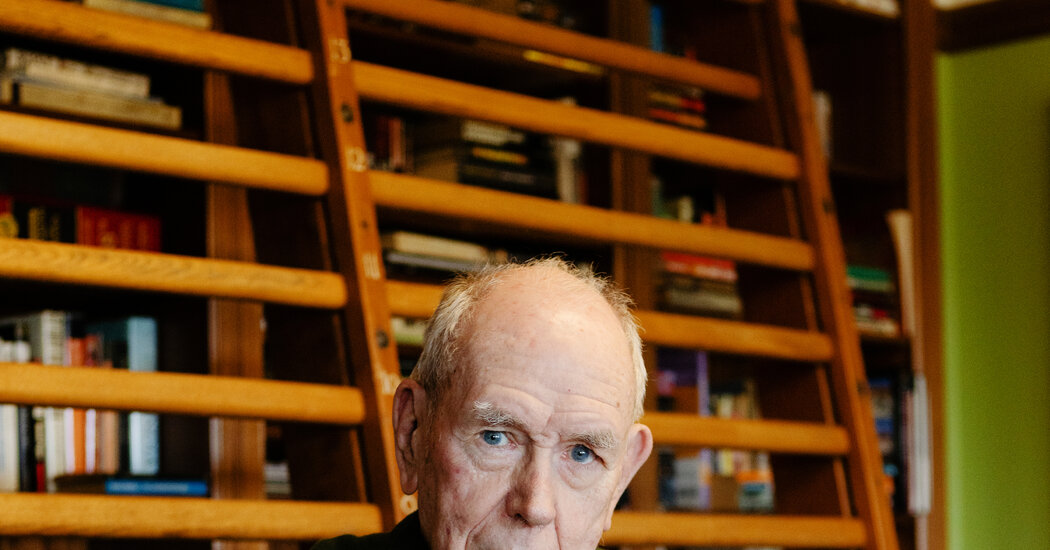

Dr. Sutherland, who is 84, believes the United States is failing, at a critical time, to consider alternative chip-making technologies that would allow the country to lead the way in building the most advanced computers.

By relying on supercooled electronic circuits that switch without electrical resistance and therefore generate no excess heat at higher speeds, computer designers will be able to overcome the biggest technological barrier for faster machines, he claims.

“The nation that best seizes the opportunity of superconducting digital circuits will enjoy computer superiority for decades to come,” he and a colleague recently wrote in an essay circulated among technologists and government officials.

Dr. Sutherland’s insights carry weight in part because, decades ago, he was instrumental in helping create the currently dominant approach to making computer chips.

In the 1970s, Dr. Sutherland, who was chairman of the computer science division at the California Institute of Technology, and his brother Bert Sutherland, then a research manager at a division of Xerox called the Palo Alto Research Center, introduced the computer scientist Lynn Conway to the physicist Carver Mead.

They pioneered a design based on a type of transistor known as complementary metal-oxide-semiconductor, or CMOS, which was invented in the United States. It made it possible to manufacture the microchips used by personal computers, video games, and a wide variety of business, consumer, and military products.

Now Dr. Sutherland argues that an alternative technology that predates CMOS, and has had many false starts, should be reconsidered. Superconducting electronics were developed at the Massachusetts Institute of Technology in the 1950s and then pursued by IBM in the 1970s before being largely abandoned. At one point, the technology even made a strange international detour before returning to the United States.

In 1987, Mikhail Gorbachev, the last Soviet leader, read an article in the Russian newspaper Pravda describing an astonishing advancement in low-temperature computing from Fujitsu, the Japanese microelectronics giant.

Mr. Gorbachev was intrigued. Wasn’t this an area, he wanted to know, where the Soviet Union could excel? Konstantin Likharev, a young associate professor of physics at Moscow State University, was eventually tasked with giving the Soviet Politburo a five-minute briefing.

When he read the article, however, Dr. Likharev concluded that the Pravda reporter had misread the press release, claiming Fujitsu’s superconducting memory chip was five orders of magnitude faster than it was.

Dr. Likharev explained the error, but he noted that the field was still promising.

That set off a series of events that led to Dr. Likharev receiving several million dollars in research support, which enabled him to build a small team of researchers and eventually, after the fall of the Berlin Wall, move to the United States. Dr. Likharev took a job in physics at New York’s Stony Brook University and helped create Hypres, a digital superconductor company that still exists today.

The story could have ended there. But it seems that the elusive technology is gaining momentum again as the cost of making modern chips has become immense. A new semiconductor plant costs $10 billion to $20 billion and takes up to five years to complete.

Dr. Sutherland argues that rather than pushing for more expensive technology that is increasingly less efficient, the United States should consider training a generation of young engineers capable of thinking outside the box.

Superconductor-based computing systems, where electrical resistance drops to zero in the switches and wires, could solve the cooling challenge that increasingly threatens the world’s data centers.

CMOS chip making is dominated by Taiwanese and South Korean companies. The United States now plans to spend nearly a third of a trillion dollars in private and public money in an effort to rebuild the country’s chip industry and regain its global dominance.

Dr. Sutherland is joined by others in the industry who believe CMOS manufacturing is reaching fundamental limits that will make the cost of progress unbearable.

“I think we can say with some certainty that we’re going to have to radically change the way we design computers because we’re really approaching the limits of what’s possible with our current silicon-based technology,” said Jonathan Koomey, a specialist in the energy needs of large-scale computers.

As the size of transistors has shrunk to just hundreds or thousands of atoms, the semiconductor industry is increasingly beset by a variety of technical challenges.

Modern microprocessor chips also suffer from what engineers describe as “dark silicon.” If all the billions of transistors on a modern microprocessor chip are used at the same time, the heat they produce will melt the chip. Consequently, entire sections of modern chips are turned off and only a few transistors are working at any given time, making them much less efficient.

Dr. Sutherland said the United States should consider alternative technologies for reasons of national security. The benefits of a superconducting computer technology could be useful first in the highly competitive market for mobile base stations, the specialized computers in cell phone towers that process wireless signals, he suggested. China has become a dominant force in the market for current 5G technology, but next-generation 6G chips would benefit from both the extreme speed and significantly lower power requirements of superconducting processors, he said.

Other industry executives agree. “Ivan is right that the power problem is the big problem,” said John L. Hennessy, an electrical engineer who is chairman of Alphabet and a former president of Stanford. He said there were only two ways to solve the problem: either by gaining efficiencies with a new design, which is unlikely for general-purpose computers, or by creating a new technology that is not bound by existing rules.

One such opportunity could be to create new computer designs that mimic the human brain, which is a marvel of low-power computer efficiency. Artificial intelligence research in a field known as neuromorphic computing has previously used conventional silicon manufacturing.

“There really is the potential to create the equivalent of the human brain using superconducting technology,” said Elie Track, chief technology officer of Hypres, the superconducting company. Compared to quantum computing technology, which is still in an early experimental stage, “this is something that can be done now, but unfortunately the funding agencies haven’t been paying attention to it,” he said.

The time for superconducting computers may not have come yet, in part because every time the CMOS world is about to hit a final obstacle, clever engineering has overcome it.

In 2019, a team of researchers at MIT led by Max Shulaker announced that it had built a microprocessor out of carbon nanotubes that promised 10 times the energy efficiency of current silicon chips. Dr. Shulaker has teamed up with Analog Devices, a semiconductor manufacturer in Wilmington, Massachusetts, to bring a hybrid version of the technology to market.

“Increasingly, I believe you can’t beat silicon,” he said. “It’s a moving target and it’s really good at what it does.”

But as silicon approaches atomic limits, alternative approaches again seem promising. Mark Horowitz, a Stanford computer scientist who helped set up several companies in Silicon Valley, said that he would not dismiss Dr. Sutherland’s passion for superconducting electronics.

“People who changed the course of history are always a little crazy, you know, but sometimes they’re crazy too,” he said.