On Tuesday, Meta AI announced the development of Cicero, which it claims is the first AI to achieve human-level performance in the strategy board game Diplomacy. It’s a remarkable achievement because the game requires deep interpersonal negotiation skills, which implies that Cicero has acquired some mastery of the language needed to win.

Even before Deep Blue beat Garry Kasparov at chess in 1997, board games were a useful measure of AI performance. In 2016, another barrier fell when AlphaGo defeated Go master Lee Sedol. Both games follow a relatively clear set of analytic rules (although Go’s rules are generally simplified for computer AI).

But with Diplomacy, much of the gameplay involves social skills. Players must show empathy, use natural language and build relationships to win – a difficult task for a computer player. With this in mind, Meta asked, “Can we build more effective and flexible agents that can use language to negotiate, persuade, and collaborate with people to achieve strategic goals, similar to the way humans do?”

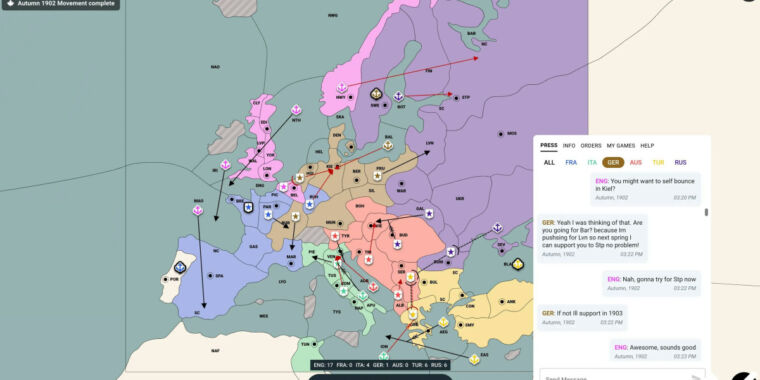

According to Meta, the answer is yes. Cicero learned its skills by playing an online version of Diplomacy on webDiplomacy.net. Over time, it became a master of the game, reportedly achieving “more than double the average score” of human players and ranking in the top 10 percent of people who played more than one game.

To create Cicero, Meta brought together AI models for strategic reasoning (similar to AlphaGo) and natural language processing (similar to GPT-3) and merged them into one agent. Cicero looks at the state of the game board and conversation history during each game and predicts how other players will act. It creates a plan that it executes through a language model that can generate human-like dialogue, allowing it to coordinate with other players.
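The loop described above (read the board and the conversation, predict what others will do, form a plan, then express it in dialogue) can be pictured with a toy sketch. This is not Meta's code: every name here (`GameState`, `predict_intents`, `choose_plan`, `generate_message`, `take_turn`) is hypothetical, and the keyword heuristic and message template are simple stand-ins for the strategic reasoning and language models the article mentions.

```python
from dataclasses import dataclass, field


@dataclass
class GameState:
    """Toy stand-in for the board position plus the conversation so far."""
    powers: list[str]
    messages: list[tuple[str, str]] = field(default_factory=list)  # (sender, text)


def predict_intents(state: GameState, me: str) -> dict[str, str]:
    """Guess each other power's stance from what it has said (placeholder heuristic)."""
    intents = {}
    for power in state.powers:
        if power == me:
            continue
        said = " ".join(text.lower() for sender, text in state.messages if sender == power)
        intents[power] = "cooperative" if "support" in said else "unknown"
    return intents


def choose_plan(intents: dict[str, str]) -> dict[str, str]:
    """Pick a partner to coordinate with, or fall back to holding position."""
    allies = sorted(p for p, stance in intents.items() if stance == "cooperative")
    if allies:
        return {"action": "coordinate", "partner": allies[0]}
    return {"action": "hold", "partner": ""}


def generate_message(plan: dict[str, str]) -> str:
    """Turn the plan into text; a real agent would condition a language model on it."""
    if plan["action"] == "coordinate":
        return f"{plan['partner']}, I will back your move this turn if you back mine."
    return ""


def take_turn(state: GameState, me: str) -> tuple[dict[str, str], str]:
    """One pass of the loop: predict, plan, then speak in line with the plan."""
    intents = predict_intents(state, me)
    plan = choose_plan(intents)
    return plan, generate_message(plan)
```

Calling `take_turn` with a state in which England has offered support to France would yield a plan to coordinate with England and a message addressed to that player. The point of the sketch is only the ordering: the message is generated from the plan, rather than the plan being inferred from free-form chat.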


Meta calls Cicero’s natural language skills a “controllable dialogue model,” which is where the core of Cicero’s personality lies. Like GPT-3, Cicero draws on a large corpus of text scraped from the internet. “To build a controllable dialogue model, we started with a BART-like language model with 2.7 billion parameters, pre-trained on text from the Internet, and fine-tuned on over 40,000 human games on webDiplomacy.net,” writes Meta.

The resulting model mastered the intricacies of a complex game. “For example, Cicero can deduce that it needs the support of a particular player later in the game,” says Meta, “and then devise a strategy to win that person’s favor – even recognizing the risks and opportunities that player sees from their own point of view.”

Meta’s Cicero research appeared in the journal Science under the title “Human-level play in the game of Diplomacy by combining language models with strategic reasoning.”

In terms of wider applications, Meta suggests its Cicero research could reduce “communication barriers” between humans and AI, such as maintaining a lengthy conversation to teach someone a new skill. Or it could power a video game where NPCs can talk just like humans, understand the player’s motivations and adapt as they go.

At the same time, this technology could be used to manipulate people by impersonating humans and deceiving them in potentially dangerous ways, depending on the context. With that in mind, Meta hopes other researchers can build on its code “responsibly” and says it has taken steps to detect and remove “toxic messages in this new domain,” likely referring to dialogue Cicero learned from the internet text it ingested – always a risk for large language models.

Meta provided a detailed site to explain how Cicero works and has also made Cicero’s code open source on GitHub. Online Diplomacy fans – and maybe even the rest of us – might have to watch out.