The Spanish government this week announced a major overhaul of a program where police rely on an algorithm to identify potential repeat victims of domestic violence, after officials faced questions about the system's effectiveness.

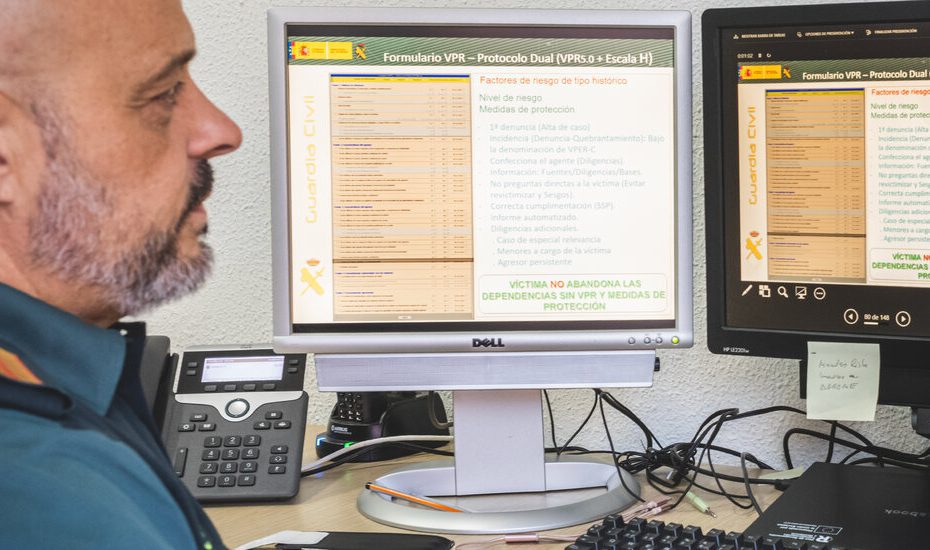

The VioGén program requires police officers to ask a victim a series of questions. The answers are entered into a software program that produces a score – from no risk to extreme risk – intended to highlight the women most vulnerable to repeated abuse. The score helps determine what police protection and other services a woman can receive.

A New York Times investigation last year found that police relied heavily on the technology and almost always accepted the decisions made by the VioGén software. Some women whom the algorithm labeled as at no risk or low risk of further harm later suffered more abuse, including dozens who were murdered, The Times found.

Spanish officials said the changes announced this week were part of a long-planned update to the system, which was introduced in 2007. They said the software had helped police forces with limited resources protect vulnerable women and reduce the number of repeat attacks.

In the updated system, VioGén 2, the software will no longer be able to label women as not at risk. Police will also have to enter more information about each victim, which officials say will lead to more accurate predictions.

Other changes aim to improve cooperation between government agencies involved in cases of violence against women, including by making it easier to share information. In some cases, victims will receive personalized protection plans.

“Machismo is knocking at our doors and doing so with a violence we have not seen in a long time,” Ana Redondo, the Equality Minister, said at a press conference on Wednesday. “It's not the time to take a step back. It's time to take a leap forward.”

Spain's use of an algorithm to guide treatment of gender violence is a far-reaching example of how governments are turning to algorithms to make important societal decisions, a trend that is expected to increase with the use of artificial intelligence. The system has been explored as a potential model for governments elsewhere trying to combat violence against women.

VioGén was created with the belief that an algorithm based on a mathematical model can serve as an unbiased tool to help police find and protect women who might otherwise be missed. The yes-or-no questions included: Was a weapon used? Were there economic problems? Does the aggressor display controlling behavior?

Victims classified as higher risk were given more protection, including regular patrols of their homes, access to a shelter and police surveillance of their abuser's movements. Those with lower scores received less help.

As of November, Spain had more than 100,000 active cases of women evaluated by VioGén, with about 85 percent of victims classified as facing little risk of being hurt again by their abuser. Police officers in Spain are trained to override VioGén's recommendations if the evidence warrants it, but The Times found the risk scores were accepted about 95 percent of the time.

Victoria Rosell, a judge in Spain and former government deputy who worked on gender violence, said a period of “self-criticism” was necessary before the government could improve VioGén. She said the system could be more accurate if it pulled information from additional government databases, including health and education systems.

Natalia Morlas, president of Somos Más, a victims' rights group, said she welcomed the changes, which she hoped would lead to better risk assessments by police.

“Properly assessing the risk to the victim is so important that it can save lives,” Ms. Morlas said. She added that it was crucial to maintain close human oversight of the system because a victim “should be treated by humans, not machines.”