Countries including the US specify the need for human operators to “exercise appropriate levels of human judgment in the use of force” when operating autonomous weapons systems. In some cases, operators can visually verify targets before authorizing attacks and can 'wave off' attacks if the situation changes.

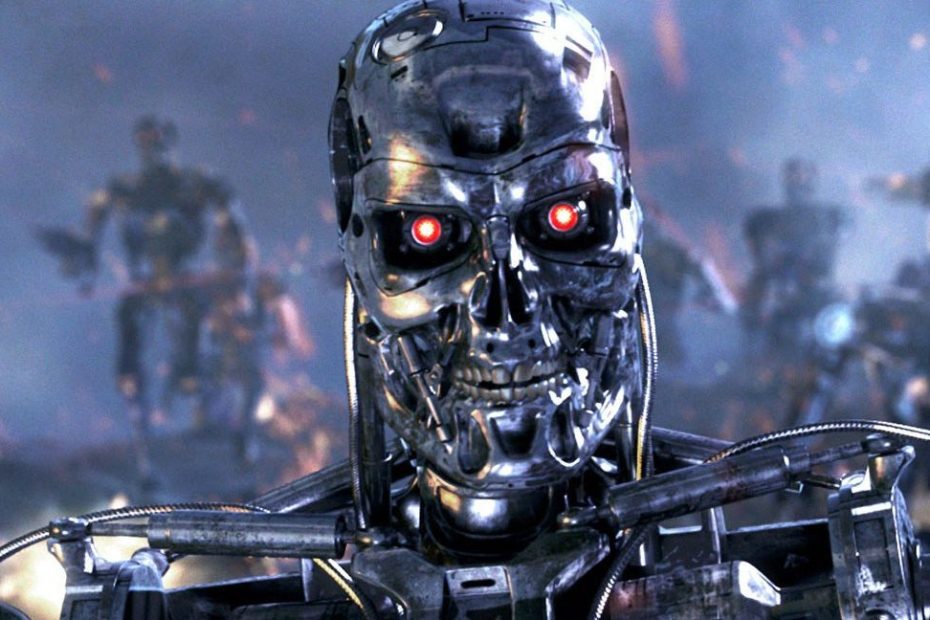

AI is already being used to support military targeting. Some even argue that this is a responsible use of the technology because it could reduce collateral damage. This idea evokes Schwarzenegger's role reversal in the original film's sequel, Terminator 2: Judgment Day, in which he plays a benevolent “machine guardian”.

However, AI could also undermine the role that human drone operators play in challenging machine recommendations. Some researchers think that people tend to trust whatever computers tell them.

Loitering munitions

Militaries involved in conflicts are increasingly using small, cheap aerial drones that can detect and crash into targets. These 'loitering munitions' (so called because they are designed to hover over a battlefield) have varying degrees of autonomy.

As I have argued in research co-authored with security researcher Ingvild Bode, the dynamics of the war in Ukraine and other recent conflicts in which these munitions have been used extensively raise concerns about the quality of control exercised by human operators.

Armed ground-based military robots designed for battlefield use may call to mind the relentless Terminators, and weaponized aerial drones may, in time, come to resemble the franchise's 'hunter-killers'. But these technologies don't hate us as Skynet does, nor are they “super-intelligent.”

However, it is crucial that human operators continue to exercise agency and meaningful control over machine systems.

Undoubtedly, The Terminator franchise's greatest legacy is the way it has shaped how we collectively think and speak about AI. This matters now more than ever because of the central role these technologies have come to play in the strategic competition for global power and influence between the US, China and Russia.

The entire international community, from superpowers such as China and the US to smaller countries, must find the political will to cooperate and to manage the ethical and legal challenges that the military applications of AI bring in this time of geopolitical upheaval. How countries navigate these challenges will determine whether we can avoid the dystopian future so vividly imagined in The Terminator, even if we won't see time-traveling cyborgs any time soon.

Tom FA Watts, Postdoctoral Fellow, Department of Politics, International Relations and Philosophy, Royal Holloway University of London. This article is republished from The Conversation under a Creative Commons license. Read the original article.