Brenda Ahearn, Michigan Tech

Roombas can be both convenient and fun, especially for cats, who like to ride atop the machines during their cleaning rounds. But the obstacle-avoiding cameras collect footage of their surroundings, sometimes quite personal footage, as was the case in 2020 when footage of a young woman on the toilet, captured by a Roomba, leaked to social media after being uploaded to a cloud server. It’s a thorny problem in our highly online, digital age, where internet-connected cameras are being used in a variety of home-monitoring and health applications, as well as more public uses like autonomous vehicles and security cameras.

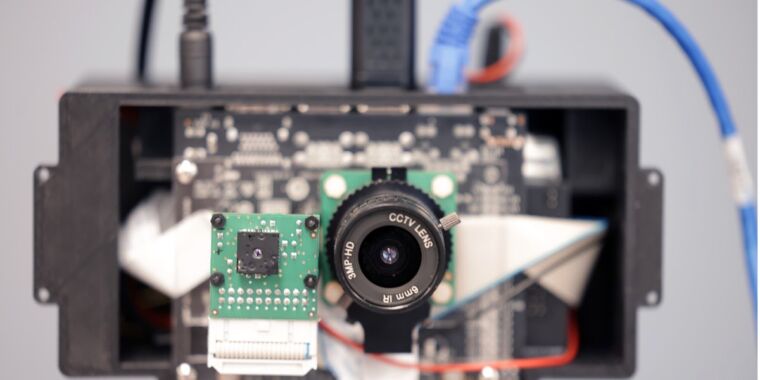

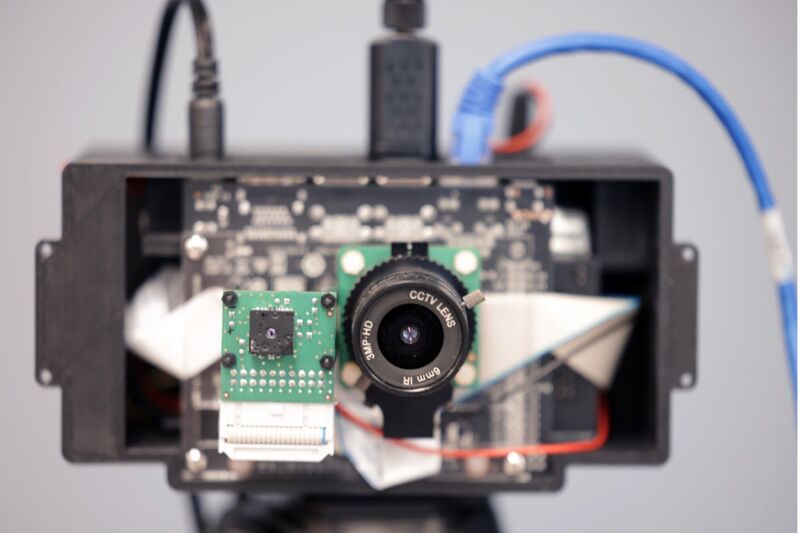

Engineers at the University of Michigan (U-M) have developed a potential solution: PrivacyLens, a new camera that can detect people in images based on body temperature and replace their likeness with a generic stick figure. They have filed a provisional patent for the device, described in a recent paper published in the Proceedings on Privacy Enhancing Technologies Symposium, held last month.

“Most consumers don’t think about what happens to the data collected by their favorite smart home devices. In most cases, raw audio, images, and video from these devices is streamed to manufacturers’ cloud-based servers, regardless of whether the data is actually needed by the end application,” said co-author Alanson Sample. “A smart device that removes personally identifiable information (PII) before sending sensitive data to private servers will be a much more secure product than what we have today.”

The authors identified three types of security threats associated with such devices: excessive data collection, authorized access followed by unauthorized sharing, and unauthorized access. The Roomba incident is an example of the first two. The device collected more data than the user had consciously consented to, and gig workers in Venezuela tasked with labeling the data to train AI posted the revealing images to online forums. A remote hacker constitutes the third, unauthorized access.

Smart doorbells, for example, have “encrypted” camera feeds, and users may think their privacy is safe. But such feeds can still be accessed by employees of the device manufacturer, data brokers, third parties or law enforcement agencies, as well as hackers. When CCTV cameras at a Massachusetts subway entrance captured a woman falling from an escalator in 2012, someone at the Massachusetts Bay Transportation Authority inexplicably shared the video footage with the press and on YouTube. It was quickly taken down, but not before multiple copies were made. The footage revealed PII such as her face, hair color, and skin tone.

No unnecessary surveillance

Most privacy-preserving approaches focus on removing region of interest (ROI) details to “sanitize” personal information from off-device true-color (RGB) images. But these are vulnerable to environmental and lighting effects that can lead to information leakage, the authors said. They developed PrivacyLens to address these issues.


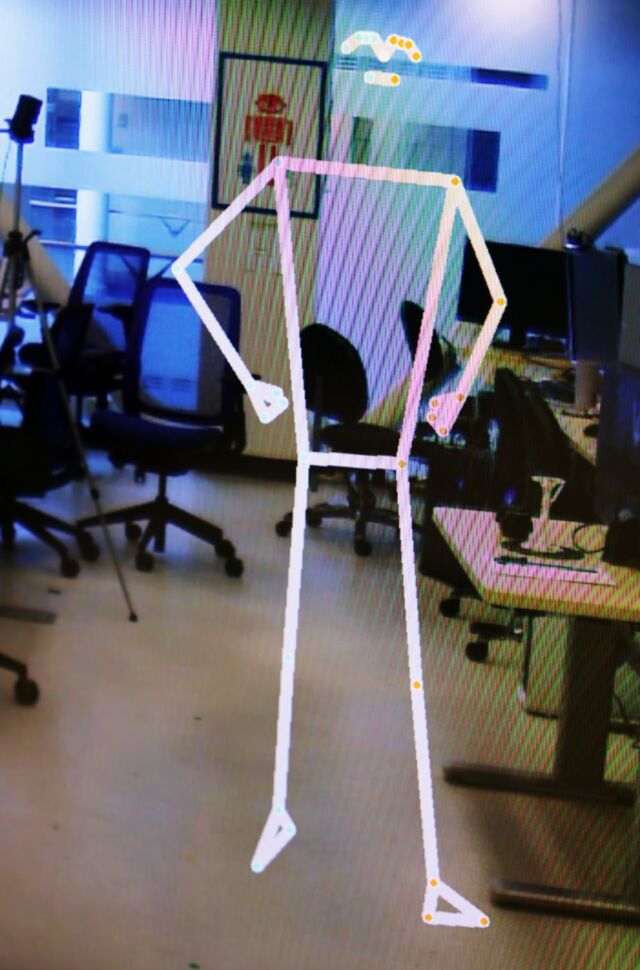

PrivacyLens is battery-powered and combines RGB and thermal imaging with an onboard GPU that can remove PII (specifically face, skin tone, hair color, body shape, and gender characteristics) before any data is stored or sent to a server. Thermal imaging lets the device detect people by their thermal silhouettes and “subtract” them from images, replacing them with an animated stick figure. The camera can still function, but the person’s identifying features are protected. A deployment study, conducted in an office atrium, a family home, and an outdoor park, showed that PrivacyLens removed PII in 99.1 percent of images, compared to 57.6 percent using RGB methods alone.
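To make the thermal "subtraction" idea concrete, here is a minimal Python sketch. It is not the authors' implementation. It assumes the thermal and RGB frames are already aligned pixel for pixel, and the temperature band, the gray fill color, and the function names are all hypothetical choices made for illustration. Where the real device draws a stick figure, this sketch just paints the masked pixels a flat color.

```python
# Illustrative sketch only: the temperature band and gray fill are assumptions,
# not values from the PrivacyLens paper.
BODY_TEMP_RANGE_C = (30.0, 38.0)   # assumed band for a person's heat signature
FILL_COLOR = (128, 128, 128)       # stand-in for the stick-figure overlay


def person_mask(thermal, temp_range=BODY_TEMP_RANGE_C):
    """Return a 2D grid of booleans: True where a pixel is in the body-heat band."""
    low, high = temp_range
    return [[low <= temp <= high for temp in row] for row in thermal]


def subtract_people(rgb, thermal):
    """Replace every RGB pixel flagged by the thermal mask with FILL_COLOR."""
    mask = person_mask(thermal)
    return [
        [FILL_COLOR if flagged else pixel for pixel, flagged in zip(rgb_row, mask_row)]
        for rgb_row, mask_row in zip(rgb, mask)
    ]


# Tiny 2x3 example: the middle column is warm, so it is treated as a person.
thermal_frame = [[21.0, 34.5, 22.0], [20.5, 35.0, 21.5]]
rgb_frame = [
    [(10, 20, 30), (200, 150, 120), (11, 21, 31)],
    [(12, 22, 32), (198, 149, 119), (13, 23, 33)],
]
sanitized = subtract_people(rgb_frame, thermal_frame)
```

In this sketch the mask is computed from the thermal channel alone, so changes in lighting that alter the color image do not change which pixels get removed.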

There are six different operational modes, depending on how much personal information the user wants to remove. For example, someone might be fine with simply swapping facial features for a generic face (Face Swap mode) when they’re in the kitchen at home, and opt for complete removal in the bedroom or bathroom (Ghost mode). That’s consistent with the findings of a small pilot study the team conducted, which also revealed a lower expectation of privacy in public settings and thus less need for the more aggressive operational modes.
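A per-room choice like this could be expressed as a simple lookup. The Python sketch below is hypothetical: it includes only the two modes named here (the other four are not listed in this article), the room names are examples, and falling back to the most aggressive mode for unknown rooms is an assumed design choice rather than documented PrivacyLens behavior.

```python
from enum import Enum


class Mode(Enum):
    # Only the two modes named in the article; the remaining four are omitted.
    FACE_SWAP = "face_swap"   # swap facial features for a generic face
    GHOST = "ghost"           # remove the person entirely


# Hypothetical user preferences, keyed by room name.
ROOM_MODES = {
    "kitchen": Mode.FACE_SWAP,
    "bedroom": Mode.GHOST,
    "bathroom": Mode.GHOST,
}


def mode_for(room):
    """Look up the mode for a room, defaulting to full removal if unknown."""
    return ROOM_MODES.get(room.lower(), Mode.GHOST)
```

Calling `mode_for("Kitchen")` returns `Mode.FACE_SWAP`, while a room with no entry, such as `mode_for("garage")`, falls back to `Mode.GHOST`.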

“Cameras provide rich information for monitoring health. It can help track exercise habits and other daily activities, or call for help if an elderly person falls,” said co-author Yasha Iravantchi, a U-M graduate student. “But this poses an ethical dilemma for people who would benefit from this technology. Without privacy restrictions, we present a situation where they have to consider giving up their privacy in exchange for good chronic care. This device could allow us to obtain valuable medical data while preserving patient privacy.” PrivacyLens could also prevent autonomous vehicles or outdoor cameras from being used for surveillance in violation of privacy laws.

Proceedings on Privacy Enhancing Technologies Symposium, 2024. DOI: 10.56553/popets-2024-0146.