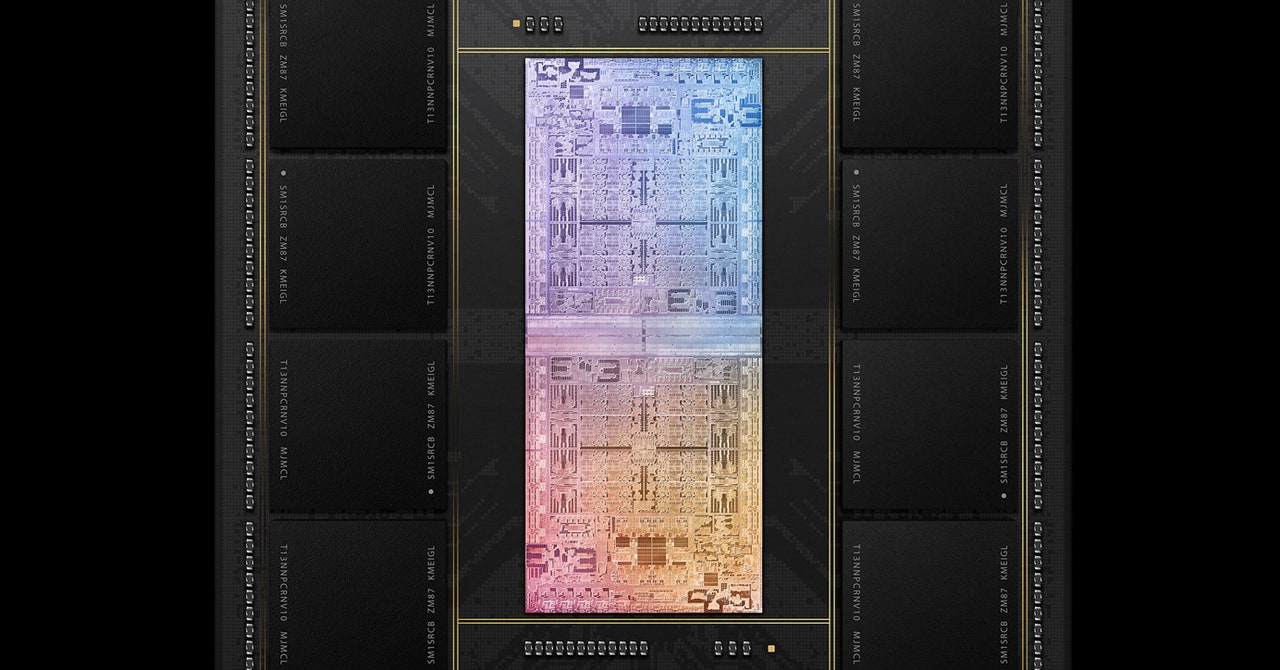

For practical purposes, the M1 Ultra acts like a single, impossibly large slab of silicon that does it all. Apple’s most powerful chip yet packs 114 billion transistors into more than a hundred processing cores dedicated to logic, graphics and artificial intelligence, all connected to 128 gigabytes of shared memory. But the M1 Ultra is basically a Frankenstein monster: two identical M1 Max chips bolted together through a silicon interface that acts as a bridge between them. This clever design lets the two chips behave as if they were one larger whole.

As it becomes harder to make transistors smaller and impractical to make individual chips much larger, chipmakers are starting to stitch components together to increase processing power. This Lego-like approach is one of the main ways the computer industry hopes to keep moving forward, and Apple’s M1 Ultra shows that such techniques can deliver major performance gains.

“This technology came at just the right time,” said Tim Millet, Apple’s vice president of hardware technologies. “In a way, it’s about Moore’s Law,” he added, citing the decades-old axiom, named after Intel co-founder Gordon Moore, that the number of transistors on a chip, and with it chip performance, doubles roughly every two years.

It’s no secret that Moore’s Law, which has driven advances in the computer industry and the wider economy for decades, no longer holds the way it once did. Some extremely complex and costly engineering tricks promise to shrink the components etched into silicon chips even further, but engineers are approaching the physical limits of how small these components, whose features are measured in billionths of a meter, can practically be made. Yet even as Moore’s Law fades, computer chips are more important, and more ubiquitous, than ever. Advanced silicon is critical to technologies such as AI and 5G, and the supply chain disruptions caused by the pandemic have highlighted how essential semiconductors now are to industries such as carmaking.

As each new generation of silicon takes a smaller step forward, a growing number of companies have turned to designing their own chips to squeeze out more performance. Apple has been using custom silicon in its iPhones and iPads since 2010, and in 2020 it announced that it would design its own chips for Macs as well, moving away from Intel’s processors. Apple built on its smartphone chip work to develop its computer chips, which use the same architecture, licensed from the British company ARM. By making its own silicon and integrating functions normally performed by separate chips into a single system-on-a-chip, Apple controls the whole product and can tailor software and hardware together. That level of control is key.

“I realized the whole [chipmaking] world had been turned upside down,” said Millet, a chip industry veteran who joined Apple in 2005 from Brocade, an American networking company. Millet explained that, unlike, say, Intel, which designs and manufactures chips that are then sold to computer makers, Apple can design a chip for a product alongside its software, hardware and industrial design.