In the late 1970s, as a young researcher at Argonne National Laboratory outside Chicago, Jack Dongarra helped write computer code called Linpack.

Linpack provided a way to perform complex math on what we now call supercomputers. It became an essential tool for science labs as they pushed the boundaries of what a computer could do. That included forecasting weather patterns, modeling economies and simulating nuclear explosions.

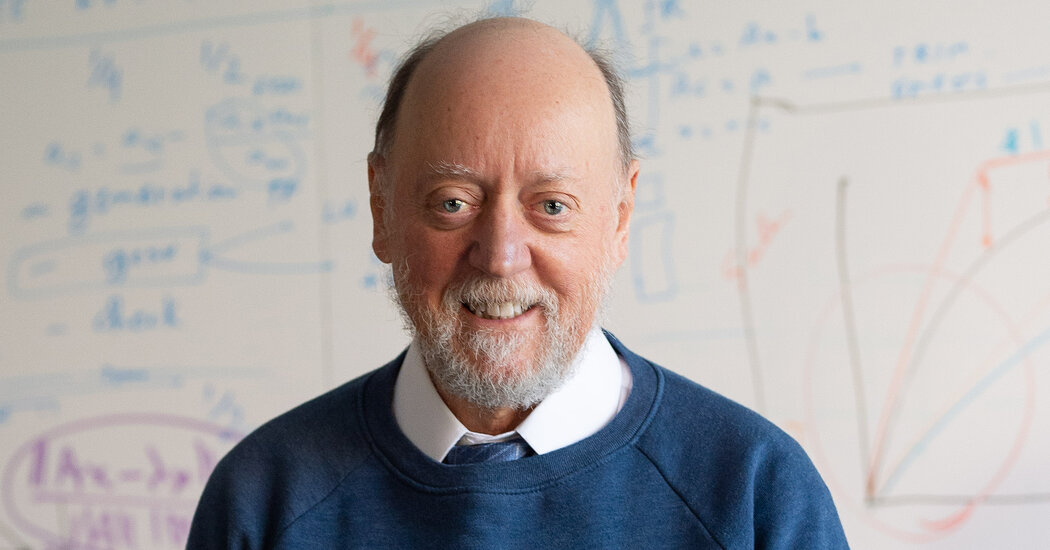

On Wednesday, the Association for Computing Machinery, the world’s largest association of computer professionals, said Dr. Dongarra, 71, would receive this year’s Turing Award for his work on fundamental concepts and code that allowed computer software to keep pace with the hardware inside the world’s most powerful machines. Given since 1966 and often called the Nobel Prize of computing, the Turing Award comes with a $1 million prize.

In the early 1990s, Dr. Dongarra and his collaborators also used the Linpack (short for linear algebra package) code to create a new kind of test that could measure the power of a supercomputer. They focused on how many calculations it could perform each second. This became the primary means of comparing the fastest machines in the world, understanding what they could do and understanding how they needed to change.

“People in science often say, ‘If you can’t measure it, you don’t know what it is,’” said Paul Messina, who oversaw the Energy Department’s Exascale Computing Project, an effort to build software for the country’s top supercomputers. “That’s why Jack’s work is important.”

Dr. Dongarra, now a professor at the University of Tennessee and a researcher at the nearby Oak Ridge National Laboratory, was a young researcher in Chicago when he specialized in linear algebra, a form of mathematics that underlies many of the most ambitious tasks in computer science. That includes everything from computer simulations of climates and economies to artificial intelligence technology intended to mimic the human brain. Developed with researchers from several U.S. labs, Linpack, a so-called software library, helped researchers perform this math on a wide variety of machines.

“Basically, these are the algorithms you need to solve problems in engineering, physics, science or economics,” said Ewa Deelman, a professor of computer science at the University of Southern California who specializes in software used by supercomputers. “They let scientists do their job.”

Over the years, as he continued to improve and expand Linpack and adapt the library for new types of machines, Dr. Dongarra also developed algorithms that could increase the power and efficiency of supercomputers. As the hardware in the machines continued to improve, so did the software.

In the early 1990s, scientists couldn’t agree on the best ways to measure the progress of supercomputers. So Dr. Dongarra and his colleagues created the Linpack benchmark and began publishing a list of the world’s 500 most powerful machines.

The Top500 list, which is updated and released twice a year and omits the space between “Top” and “500,” sparked a competition among science labs to see who could build the fastest machine. What started as a battle for bragging rights took on an added edge as labs in Japan and China challenged the traditional strongholds in the United States.

“There’s a direct parallel between how much computing power you have in a country and the types of problems you can solve,” said Dr. Deelman.

The list is also a way of understanding how technology is evolving. In the 2000s, it showed that the most powerful supercomputers were those that connected thousands of small computers into one gigantic whole, each equipped with the same kind of computer chips used in desktop PCs and laptops.

In the years that followed, it tracked the rise of “cloud computing” services from Amazon, Google and Microsoft, which connected small machines in even greater numbers.

These cloud services are the future of scientific computing, as Amazon, Google and other internet giants build new kinds of computer chips that can train A.I. systems with a speed and efficiency never possible in the past, Dr. Dongarra said in an interview.

“These companies are building chips to fit their own needs, and that will have a big impact,” he said. “We will rely more on cloud computing and eventually give up the ‘big iron’ machines in the national labs today.”

Scientists are also developing a new kind of machine called a quantum computer, which could make today’s machines look like toys by comparison. As the computers of the world continue to evolve, they will need new benchmarks.

“Manufacturers are going to brag about these things,” said Dr. Dongarra. “The question is: what is reality?”