Benj Edwards / Stable Diffusion

On Thursday, AI company Anthropic announced that it has given its ChatGPT-like Claude AI language model the ability to analyze an entire book’s worth of material in under a minute. This new ability comes from expanding Claude’s context window to 100,000 tokens, or about 75,000 words.

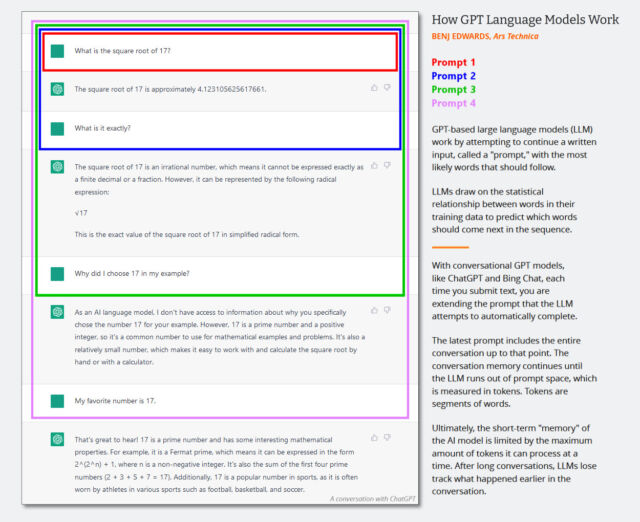

Like OpenAI’s GPT-4, Claude is a large language model (LLM) that works by predicting the next token in a sequence when given an input. Tokens are snippets of words used to simplify AI data processing, and a “context window” is like short-term memory: how much human-provided input data an LLM can process at once.

A larger context window means an LLM can consider larger works like books or engage in very long interactive conversations that span “hours or even days,” according to Anthropic:

The average person can read 100,000 tokens of text in ~5+ hours, and then they may need significantly more time to process, remember, and analyze that information. Claude can now do this in less than a minute. For example, we loaded the full text of The Great Gatsby into Claude-Instant (72,000 tokens) and modified a line to say that Mr. Carraway was “a software engineer working on machine learning tooling at Anthropic.” When we asked the model to see what was different, it responded with the correct answer within 22 seconds.

While it may not sound impressive to pick up changes in a text (Microsoft Word can do that, but only if it has two documents to compare), remember that after you have Claude read the text of The Great Gatsby, the AI model can then interactively answer questions about it or analyze its meaning. 100,000 tokens is a big upgrade for LLMs. By comparison, OpenAI’s GPT-4 LLM has context window lengths of 4,096 tokens (approximately 3,000 words) when used as part of ChatGPT and 8,192 or 32,768 tokens via the GPT-4 API (which is currently only available via waitlist).
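The article's figures imply a rough ratio of about 0.75 words per token (100,000 tokens is about 75,000 words; 4,096 tokens is about 3,000 words). The short Python sketch below assumes that ratio holds as an average for English text and uses it to convert the context window sizes mentioned here into approximate word counts, then checks whether the 72,000-token Great Gatsby text from Anthropic's example would fit in each window. The names `WORDS_PER_TOKEN`, `approx_words`, and `fits` are illustrative, not part of any vendor API; real token counts depend on the tokenizer and the text.

```python
# Assumption: ~0.75 English words per token, the ratio implied by
# "100,000 tokens, or about 75,000 words". Real counts vary by tokenizer and text.
WORDS_PER_TOKEN = 0.75

# Token count Anthropic reported for the full text of The Great Gatsby.
GATSBY_TOKENS = 72_000

# Context window sizes mentioned in the article, in tokens.
WINDOWS = {
    "GPT-4 in ChatGPT": 4_096,
    "GPT-4 API (8K)": 8_192,
    "GPT-4 API (32K)": 32_768,
    "Claude (100K)": 100_000,
}


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit in `tokens` tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def fits(text_tokens: int, window: int) -> bool:
    """True if a text of `text_tokens` tokens fits in a `window`-token context.
    Simplification: ignores the room needed for the question and the reply."""
    return text_tokens <= window


for name, window in WINDOWS.items():
    verdict = "fits" if fits(GATSBY_TOKENS, window) else "does not fit"
    print(f"{name}: {window:,} tokens, about {approx_words(window):,} words; Gatsby {verdict}")
```

Only the 100,000-token window is large enough to hold the whole novel at once, which is why the Gatsby test relies on Claude's expanded context.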

To show how a larger context window allows a longer conversation with a chatbot like ChatGPT or Claude, we made a diagram for a previous article that illustrates how the prompt (which is held in the context window) grows to hold the full text of the conversation as it goes on. A larger context window therefore means a conversation can last longer before the chatbot loses its “memory” of the conversation.

Benj Edwards / Ars Technica
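The idea in that diagram can be illustrated with a short Python sketch. It assumes a chatbot that resends as much of the conversation as fits in its context window and drops the oldest turns first. The word-count `count_tokens` function is a hypothetical stand-in for a real tokenizer, and `fit_to_context` is an illustrative helper; neither describes how ChatGPT or Claude actually manage conversation history.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Hypothetical stand-in tokenizer: one token per whitespace-separated word.
    Real LLMs use subword tokenizers, so real counts differ."""
    return len(text.split())


def fit_to_context(turns: List[str], window: int) -> List[str]:
    """Keep the most recent turns whose combined token count fits in `window`.
    Anything older is dropped, which is when the chatbot 'forgets' it."""
    kept: List[str] = []
    used = 0
    for turn in reversed(turns):  # walk backward from the newest turn
        cost = count_tokens(turn)
        if used + cost > window:
            break  # stop at the first turn that doesn't fit, keeping history contiguous
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "User: My name is Ada.",
    "Bot: Nice to meet you, Ada.",
    "User: What is a context window?",
    "Bot: It is how much text I can consider at once.",
    "User: What is my name?",
]

print(fit_to_context(conversation, window=100))  # the whole conversation fits
print(fit_to_context(conversation, window=20))   # the turns mentioning Ada are dropped
```

With the smaller window, the model would no longer see the turn where the user gave their name, so it could not answer the final question; a larger window pushes that point further out.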

According to Anthropic, Claude’s enhanced capabilities extend beyond processing books. The enlarged context window can potentially help companies extract important information from multiple documents through a conversational interaction. The company suggests that this approach may outperform vector search-based methods when dealing with complicated queries.

A demo, provided by Anthropic, of Claude acting as a business analyst.
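To make the comparison Anthropic draws more concrete, here is a toy Python sketch of the two approaches. A vector-search pipeline ranks document chunks by similarity to the query and passes only the top few to the model; a long-context model can instead be handed every document when they all fit. The bag-of-words `embed` function, the word-count token estimate, and the `build_context` helper are hypothetical simplifications (real systems use learned embedding models and proper tokenizers), and the sketch says nothing about which approach handles complicated queries better.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Hypothetical toy embedding: lowercase bag-of-words counts.
    Real vector search uses learned embedding models."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def vector_search(query: str, chunks: List[str], k: int) -> List[str]:
    """Retrieval approach: return only the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_context(query: str, chunks: List[str], window: int, k: int = 2) -> List[str]:
    """Hypothetical helper: hand the model every chunk if they all fit in the
    context window (long-context approach), otherwise fall back to retrieval."""
    total = sum(len(c.split()) for c in chunks)  # crude word-count proxy for tokens
    if total <= window:
        return list(chunks)
    return vector_search(query, chunks, k)


docs = [
    "Q1 revenue grew 12 percent on strong cloud sales.",
    "The office cafeteria menu changes every Monday.",
    "Q2 revenue fell after cloud sales slowed.",
]
query = "How did cloud revenue change?"

print(build_context(query, docs, window=100))  # all three documents fit
print(build_context(query, docs, window=10))   # only the two revenue chunks are kept
```

The retrieval path can miss relevant material whenever the similarity ranking misjudges it, while the long-context path avoids that ranking step entirely as long as everything fits in the window.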

While Anthropic is not as big a name in AI as Microsoft and Google, it has become a notable rival to OpenAI in terms of competitive offerings in LLMs and API access. Former OpenAI VP of Research Dario Amodei and his sister Daniela founded Anthropic in 2021 after a disagreement over OpenAI’s commercial direction. Notably, Anthropic received a $300 million investment from Google in late 2022, with Google acquiring a 10 percent stake in the company.

Anthropic says 100,000-token context windows are now available to users of the Claude API, which is currently restricted by a waitlist.