You hardly need ChatGPT to generate a list of reasons why generative artificial intelligence is often not that great. The way algorithms are trained on creative work, often without permission, the nasty biases they harbor, and the enormous amounts of energy and water needed to train them are all serious problems.

But putting all that aside for a moment, it's remarkable how powerful generative AI can be for prototyping potentially useful new tools.

I saw this firsthand when I visited the Sundai Club, a generative AI hackathon held one Sunday every month near the MIT campus. A few months ago, the group was kind enough to let me sit in, and that session was devoted to exploring tools that could be useful to journalists. The club is supported by a Cambridge nonprofit called Æthos, which promotes socially responsible use of AI.

The Sundai Club team includes students from MIT and Harvard, a few professional developers and product managers, and even one person who works for the military. Each event starts with a brainstorm of possible projects, which the group then narrows down to a final option that they actually try to build.

Notable pitches at the journalism hackathon included using multimodal language models to track political posts on TikTok, automatically generating freedom of information requests and appeals, and summarizing video clips of local court hearings to support local news coverage.

Ultimately, the group decided to build a tool that would help reporters who cover AI identify potentially interesting papers posted to the Arxiv, a popular server for research preprints. My presence probably steered them toward this idea, since I had mentioned during the meeting that scouring the Arxiv for interesting research was a high priority for me.

After settling on a goal, the team's programmers used the OpenAI API to create a word embedding (a mathematical representation of words and their meanings) of AI papers from the Arxiv. This made it possible to analyze the data to find papers relevant to a particular term and to explore relationships between different areas of research.
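The article doesn't describe the team's code, so what follows is only a minimal sketch of the general idea behind embedding-based search: turn each abstract and the search term into vectors, then rank papers by cosine similarity. The `toy_embed` function is a hypothetical offline stand-in for a real embedding model such as those offered through the OpenAI API; it only notices shared words, not meaning, and the paper titles and abstracts are made up.

```python
import math
import re
import zlib
from collections import Counter

DIM = 1024  # size of the toy vectors (an arbitrary choice for this sketch)


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding API call.

    Hashes each lowercase word into one of DIM buckets, counts occurrences,
    and L2-normalizes the result. A real model would capture meaning,
    not just overlapping words.
    """
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += count
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so their dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def search(query: str, papers: dict[str, str], top_k: int = 3) -> list[tuple[str, float]]:
    """Rank paper titles by similarity between the query and each abstract."""
    q = toy_embed(query)
    scored = [(title, cosine(q, toy_embed(abstract))) for title, abstract in papers.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Usage with made-up papers:
papers = {
    "Planning with LLM agents": "Autonomous AI agents that plan and act with tools",
    "Protein folding": "Diffusion models for predicting protein structure",
    "Weather GNNs": "Graph neural networks for weather forecasting",
}
for title, score in search("AI agents", papers):
    print(f"{score:.2f}  {title}")
```

Swapping `toy_embed` for a call to a real embedding model would leave `search` unchanged, which is the main appeal of this design: once everything lives in the same vector space, "relevant to a term" reduces to "nearby in that space."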

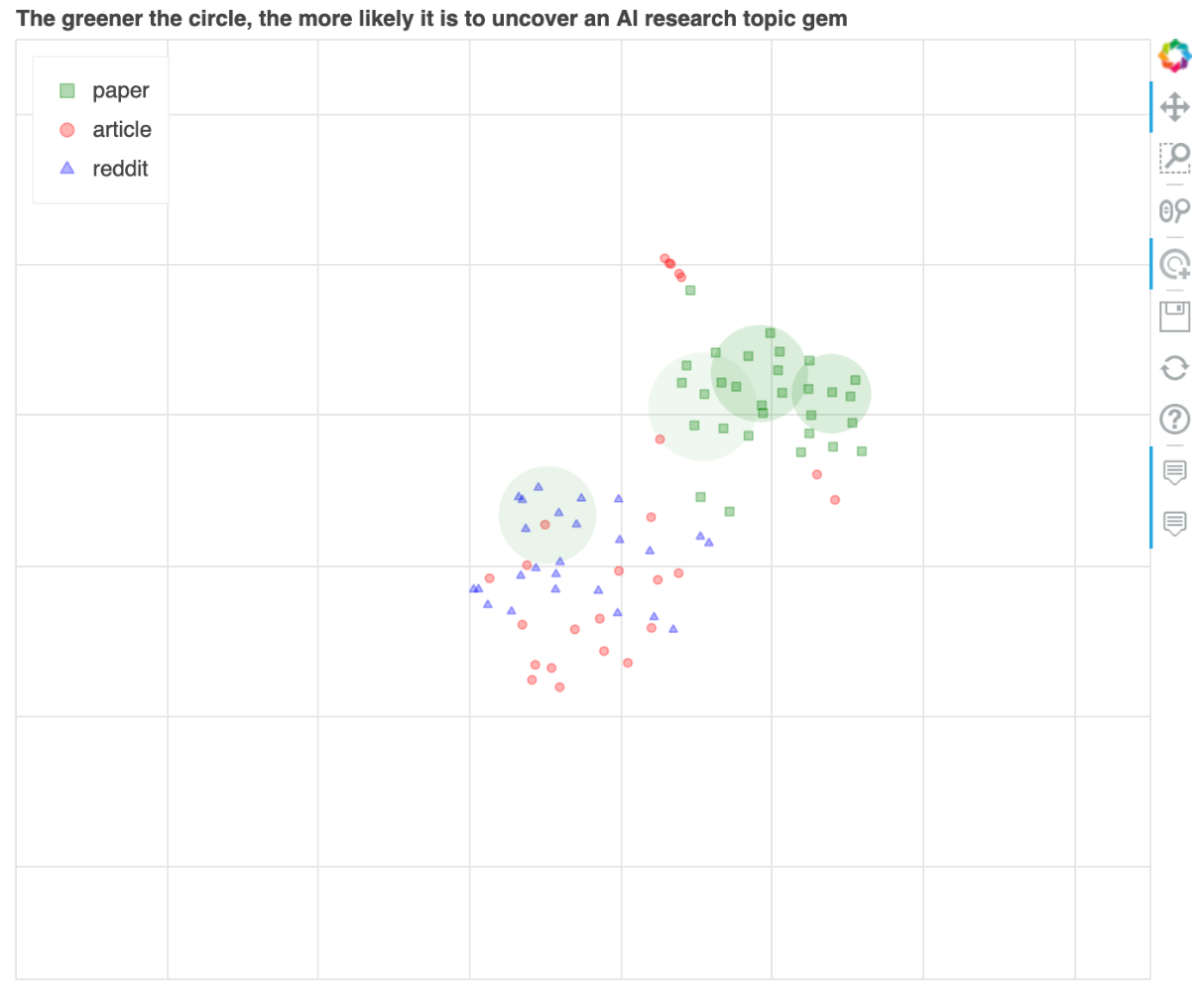

Using another word embedding built from Reddit threads, along with a Google News search, the programmers created a visualization that shows research papers alongside related Reddit discussions and news reports.
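How the team drew its map isn't described here. One common way to put embeddings from several sources on a single chart is to project them down to two dimensions; the sketch below assumes principal component analysis (PCA), computed with plain power iteration so it needs only the standard library. t-SNE and UMAP are frequently used for this instead. The three-dimensional vectors and labels in the usage lines are invented for illustration.

```python
import math
import random


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def pca_2d(vectors: list[list[float]], iters: int = 100, seed: int = 0) -> list[tuple[float, float]]:
    """Project vectors onto their top two principal components.

    Uses power iteration on the covariance matrix; the second component is
    kept orthogonal to the first after every iteration.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]

    rng = random.Random(seed)  # fixed seed so the projection is reproducible
    components: list[list[float]] = []
    for _ in range(2):
        vec = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            vec = [dot(row, vec) for row in cov]  # multiply by the covariance matrix
            for c in components:  # remove any overlap with earlier components
                proj = dot(vec, c)
                vec = [x - proj * y for x, y in zip(vec, c)]
            norm = math.sqrt(dot(vec, vec)) or 1.0
            vec = [x / norm for x in vec]
        components.append(vec)
    return [(dot(r, components[0]), dot(r, components[1])) for r in centered]


# Usage with hypothetical embeddings from three sources:
items = [
    ("paper", "Agent planning", [0.9, 0.1, 0.0]),
    ("paper", "Protein folding", [0.0, 0.2, 0.9]),
    ("reddit", "Thread on AI agents", [0.8, 0.2, 0.1]),
    ("news", "Startup launches agent", [0.85, 0.15, 0.05]),
]
for (source, label, _), (x, y) in zip(items, pca_2d([v for _, _, v in items])):
    print(f"{source:6s} ({x:+.2f}, {y:+.2f})  {label}")
```

The signs of the axes are arbitrary, so only relative positions matter: points that land close together on the chart, such as a paper sitting next to a cluster of Reddit threads and news stories, had similar embeddings to begin with.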

The resulting prototype, called AI News Hound, is rough around the edges, but it shows how large language models can help mine information in interesting new ways. The screenshot above shows the tool being used to search for the term “AI agents.” The two green squares closest to the news article and Reddit clusters represent research papers that could be included in an article about efforts to build AI agents.

Courtesy of Sundai Club.